The meta robots tag is a powerful and precise tool that gives webmasters page-level control over how search engines crawl and index their content. While the more commonly known robots.txt file provides site-wide crawling suggestions, the meta robots tag offers granular, page-by-page directives that are essential for a sophisticated SEO strategy. Mastering the use of this tag is a non-negotiable skill for any professional looking to manage a website’s search presence effectively. This guide will explore nine proven strategies for using the meta robots tag to achieve specific and impactful SEO outcomes.

Many webmasters have a surface-level understanding of the meta robots tag, often limited to the noindex directive. However, this powerful tag offers a much wider range of commands that can be used to sculpt a site’s index, control how its snippets appear in the search results, and manage the flow of link signals. The following sections will provide a deep dive into the most important directives and the strategic scenarios in which they should be deployed, transforming this simple line of code into a cornerstone of a technically sound optimization program.

The Fundamental Role of the Meta Robots Tag

Before exploring the specific strategies, it is crucial to understand what the meta robots tag is and its fundamental role in technical SEO. This foundational knowledge is key to using it correctly and avoiding potentially catastrophic errors.

What is the Meta Robots Tag?

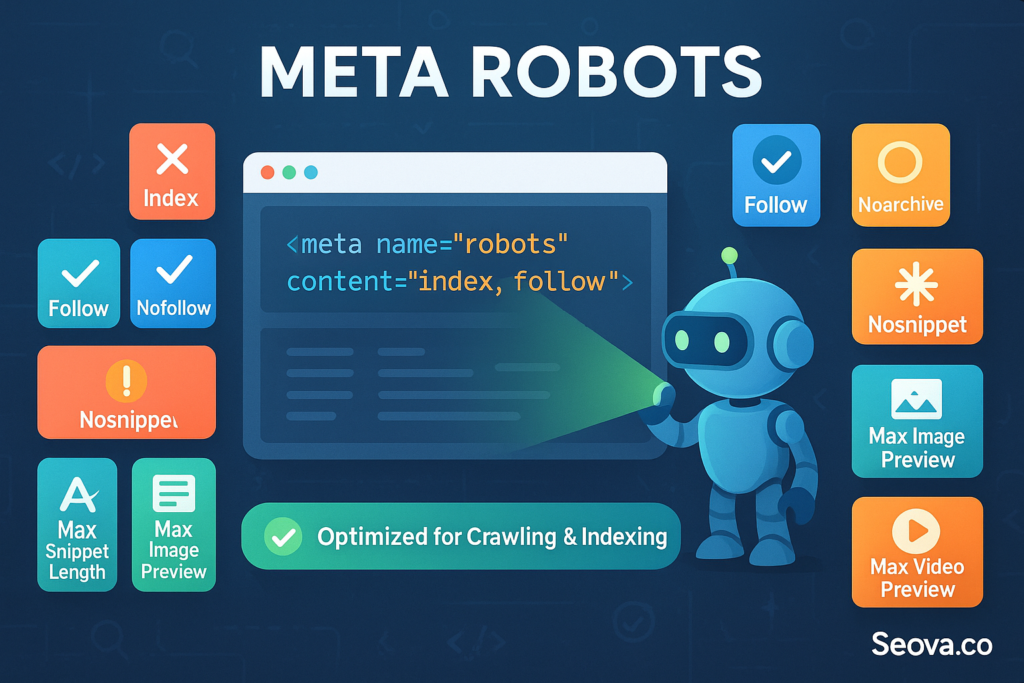

The meta robots tag is a piece of HTML code that is placed in the <head> section of a webpage. Its purpose is to provide instructions to search engine crawlers (bots) about how to handle that specific page. The basic syntax is <meta name="robots" content="directive1, directive2">. The name="robots" attribute indicates that the instruction is for all search engines, while the content attribute contains the specific command or commands.
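As a minimal illustration of the syntax described above, the tag sits alongside the other metadata in the document head. The page title and the chosen directives below are placeholders for illustration, not recommendations for any particular page.

```html
<!DOCTYPE html>
<html lang="en">
<head>
  <meta charset="utf-8">
  <title>Example page</title>
  <!-- Addressed to every crawler that honors the robots meta tag;
       multiple directives are separated by commas -->
  <meta name="robots" content="noindex, nofollow">
</head>
<body>
  <p>Page content goes here.</p>
</body>
</html>
```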

Meta Robots vs. Robots.txt: The Critical Difference

This is one of the most important distinctions in technical SEO. A robots.txt file asks search engine bots not to crawl a specific URL or directory. The meta robots tag, on the other hand, provides instructions on how to index or serve a page that has already been crawled. If a page is blocked by robots.txt, the crawler will never see the meta robots tag on that page, so a noindex placed there cannot take effect, and the URL can still end up indexed if other pages link to it. Therefore, these two tools must be used in harmony. The meta robots tag is the correct tool for controlling indexing, not robots.txt.

Why Page-Level Control is So Important

The primary power of the meta robots tag lies in its specificity. It allows a webmaster to apply a rule to a single page without affecting any other page on the site. This granular control is essential for managing the diverse types of content that exist on a modern website. It is a key tool for ensuring that only the most valuable, high-quality pages are presented to search engines for indexing, which is a core principle of building an SEO-friendly website.

9 Proven Strategies for the Meta Robots Tag

A strategic approach to the meta robots tag involves understanding its various directives and knowing when to apply them. The following nine strategies cover the most important and impactful use cases, from the most common to the more advanced.

Strategy #1: Master the noindex Directive to Sculpt Your Index

The noindex directive is the best-known and most powerful command for the meta robots tag. It instructs search engines not to include the page in their index.

The “Noindex” Directive Explained

When a search engine crawls a page and sees the noindex directive, it will drop that page from its search results. This is a directive, not a hint, and is respected by all major search engines. It is the definitive way to prevent a page from appearing in the SERPs.

Strategic Use Cases for noindex

The noindex tag is not for your important, high-value pages. It is a strategic tool for “index sculpting”—the practice of preventing low-value or duplicate pages from being indexed. This helps search engines to focus their resources on your most important content. Common use cases include:

- Thin Content Pages: Pages with very little unique content.

- Internal Search Results: The pages generated by a website’s own search bar.

- Thank You Pages: The confirmation pages that users see after submitting a form.

- Admin and Login Pages: Pages that are not intended for the public. Note that noindex only keeps them out of search results; it does not restrict access to them.

- Filtered Pages: E-commerce pages with many applied filters that create duplicate content.

Strategy #2: Use the nofollow Directive to Control Link Signals

The nofollow directive instructs search engines not to follow the links on a page or pass link equity and ranking signals through them.

The “Nofollow” Directive Explained

When this directive is applied to a page, it tells search engines not to “endorse” any of the outbound or internal links found on that page. This is a page-level command that applies to all links on the page. It is different from the rel="nofollow" attribute, which can be applied to individual links.
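The difference between the two forms can be sketched as follows. The two fragments are alternatives rather than one page, and the URL and link text are hypothetical.

```html
<!-- Page-level: covers every link on the page -->
<head>
  <meta name="robots" content="nofollow">
</head>

<!-- Link-level: covers only this one link; other links on the page are unaffected -->
<a href="https://example.com/untrusted-page" rel="nofollow">Untrusted source</a>
```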

Strategic Use Cases for nofollow

The page-level nofollow is used in situations where a webmaster cannot or does not want to vouch for the quality of the links on the page. The most common use case is for pages with a large amount of user-generated content, such as forum threads or blog comment sections, where there is a risk of users posting spammy links.

Strategy #3: Combine noindex, follow for Strategic De-indexing

This combination is one of the most useful and common strategies for managing a site’s architecture over time.

The Power of the Combination

The directive <meta name="robots" content="noindex, follow"> is a powerful instruction. It tells search engines two things: “Do not include this page in your index,” and “Do follow all the links on this page and pass their equity to the pages they point to.”

Strategic Use Cases

This combination is ideal for de-indexing pages that are no longer valuable in their own right but still link to other important pages. A great example is an old blog category or tag page that is being deprecated. By using noindex, follow, a webmaster can remove the low-value archive page from the index while still ensuring that search engines can discover and pass value to the valuable articles that are linked from it.
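For the deprecated archive scenario described above, the head of such a page might look like the sketch below. The title is a hypothetical placeholder.

```html
<head>
  <title>Archive: posts tagged with a retired topic</title>
  <!-- Keeps this archive page out of the index while leaving its links crawlable.
       "follow" is already the default behavior; it is written out here
       only to make the intent explicit. -->
  <meta name="robots" content="noindex, follow">
</head>
```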

Strategy #4: Prevent Caching with the noarchive Directive

The noarchive directive is a simple instruction that prevents search engines from storing and showing a cached version of a page.

The “Noarchive” Directive Explained

Search engines may store a cached copy of a page and link to it from the search results. The noarchive tag tells them not to do this, so no “Cached” link appears for that page in the SERPs. Note that Google retired its public “Cached” link in 2024, so the directive now matters mainly for search engines that still offer one.

Strategic Use Cases

This directive is useful for pages where the content is very time-sensitive and a cached, outdated version could be misleading. A common use case is for pages with frequently changing pricing information or limited-time offers.

Strategy #5: Control SERP Snippets with nosnippet

The nosnippet directive gives a webmaster control over how their page is presented in the search results.

The “Nosnippet” Directive Explained

This directive tells search engines not to show any text snippet or video preview for the page in the search results. The SERP listing will only show the title tag and the URL.

Strategic Use Cases

This can be a strategic choice for some publishers who have a strong brand and believe that a “blind” headline will entice more users to click through to their site to read the content. It prevents users from getting their answer directly from the meta description or an auto-generated snippet in the SERP.

Strategy #6: Manage Image Previews with max-image-preview

This directive provides specific control over how a page’s images are displayed in the search results.

The Image Preview Directive Explained

The max-image-preview directive allows a webmaster to specify the maximum size of an image preview to be shown for their page. The options are none (no image preview), standard (a default-sized preview), or large (the largest possible preview).

Strategic Use Cases

For visually driven websites, such as photography blogs or e-commerce stores, setting this directive to large can be a powerful strategy. A large, high-quality image preview can make a search result much more visually appealing and can significantly increase its click-through rate.
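A minimal sketch of the directive in use is shown below, with a placeholder page title. Unlike the single-word directives, this one takes a value, which is joined to the directive name with a colon.

```html
<head>
  <title>Example product gallery</title>
  <!-- Accepted values are none, standard, or large -->
  <meta name="robots" content="max-image-preview:large">
</head>
```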

Strategy #7: Set a “Disappearance” Date with unavailable_after

This is a highly useful directive for content that has a specific expiration date.

The “Unavailable_after” Directive Explained

The unavailable_after directive allows a webmaster to specify an exact date and time after which a page should no longer appear in search results. This is a much more efficient way to handle temporary content than manually adding a noindex tag on the expiration date.

Strategic Use Cases

This is perfect for pages about events that have a specific end date, limited-time promotional offers, or job postings that are only open for a set period. It automates the process of removing time-sensitive content from the index.
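A sketch for a limited-time offer page might look like this. The date is an arbitrary placeholder written in ISO 8601 form, which is one of the widely used date formats that Google documents as acceptable for this directive. Support in other search engines should not be assumed.

```html
<head>
  <title>Example: spring sale</title>
  <!-- Placeholder expiry date; replace it with the real end date of the offer -->
  <meta name="robots" content="unavailable_after: 2030-03-31">
</head>
```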

Strategy #8: Use Bot-Specific Directives for Granular Control

While the standard <meta name="robots"...> tag applies to all search engines, it is also possible to give instructions to a specific search engine bot.

Targeting Specific User-Agents

This is done by changing the name attribute of the tag. For example, <meta name="googlebot" content="..."> would provide instructions that only apply to Google’s main crawler. This allows for a very granular level of control if, for some reason, a webmaster wants to give different instructions to different search engines.
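As a hedged illustration, a general rule and a Google-specific rule can sit side by side. The directive choices here are arbitrary examples.

```html
<head>
  <!-- Read by every crawler that honors the robots meta tag -->
  <meta name="robots" content="nosnippet">
  <!-- Read only by Google's crawler, in addition to the general rule above -->
  <meta name="googlebot" content="noindex">
</head>
```

In this sketch, Google would apply both rules to the page, while other search engines would only see the nosnippet instruction.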

Strategy #9: Understand the X-Robots-Tag for Non-HTML Files

The meta robots tag can only be placed in the <head> section of an HTML document. This means it cannot be used for other file types. The X-Robots-Tag is the solution to this problem.

What is the X-Robots-Tag?

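The X-Robots-Tag delivers the same directives as the meta robots tag, but in the HTTP header of a server response instead of in the HTML. For example, a response that includes the header X-Robots-Tag: noindex is treated the same way as a page carrying a noindex meta tag.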

Strategic Use Cases

This is essential for controlling the indexing of non-HTML files. For example, a webmaster could use the X-Robots-Tag to noindex a PDF document or an image file that they do not want to appear in search results.

When implementing these tags, it’s vital they don’t conflict with other technical signals. For instance, a noindex tag on a page that is part of an hreflang cluster can break the international setup. Similarly, it’s important to ensure that a page with a noindex directive is not the target of a canonical tag on another page, since the two signals conflict. These tags are a critical part of the overall family of SEO meta tags.

A Precision Instrument for Technical Control

The meta robots tag is a precision instrument in the technical SEO toolkit. It provides a level of granular, page-by-page control that is essential for managing a modern, complex website. A strategic and accurate use of its various directives allows a webmaster to sculpt their site’s presence in the search index, control how their content is presented, and ensure that search engines are focusing their resources on the most valuable pages. While it may seem like a small piece of code, mastering the nine proven strategies for the meta robots tag is a non-negotiable skill for any professional who is serious about achieving technical excellence and driving SEO success.

Frequently Asked Questions About the Meta Robots Tag

What is a meta robots tag?

It is an HTML tag that provides instructions to search engine crawlers on how to index or serve a specific webpage. It is a powerful tool for page-level control.

How do I use the noindex tag?

You use the noindex tag by adding <meta name="robots" content="noindex"> to the <head> section of any page that you want to exclude from the search engine’s index.

What is the difference between noindex and nofollow?

Noindex prevents a page from being included in the search results. Nofollow allows the page to be indexed but tells search engines not to pass any value through the links on that page.

What is the difference between meta robots and robots.txt?

Robots.txt is a file that suggests which pages a search engine should not crawl. The meta robots tag is a directive on a page that has been crawled that tells a search engine how to index it. To de-index a page, a search engine must be allowed to crawl it to see the noindex tag.

Can I use multiple meta robots tags on one page?

It is best to use a single meta robots tag per page and list the directives in one comma-separated content attribute. Multiple tags are technically allowed, but if they conflict, the search engine will use the most restrictive one. For more general advice, you can review some popular SEO tips.