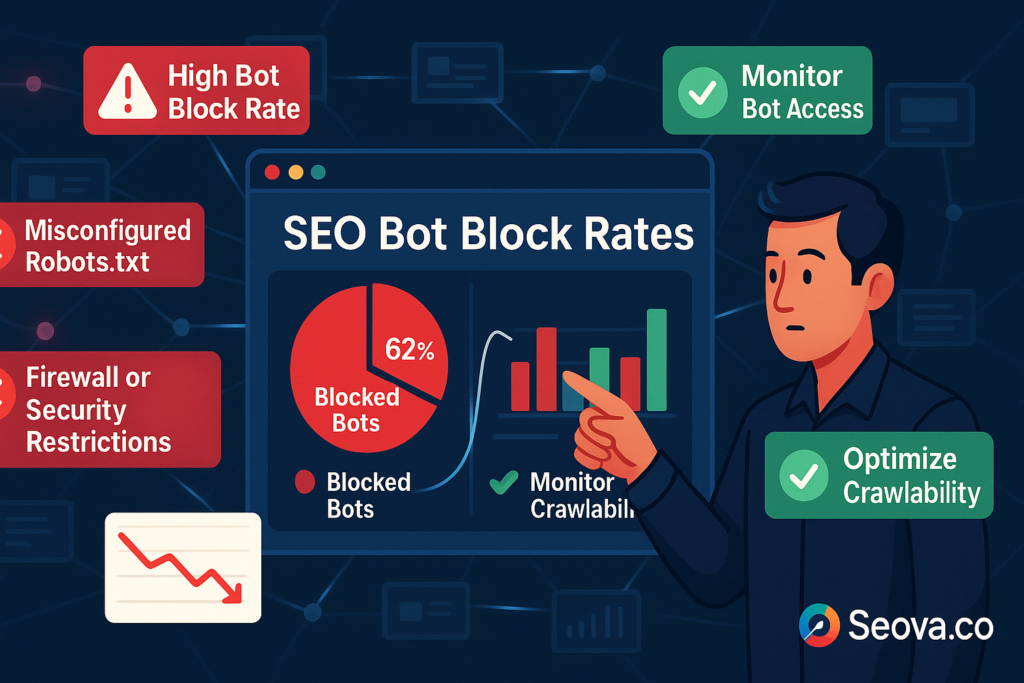

Understanding and managing SEO bot block rates is a critical, yet often hidden, aspect of technical SEO that can severely impact a website’s ability to be crawled and indexed. This metric is the percentage of requests from a legitimate search engine crawler that are blocked when it tries to access a site’s URLs. Many webmasters are unaware that their own security systems or server limitations are turning away the very bots they need for organic visibility. This guide explains what SEO bot block rates are, why they are so damaging, and how to check for and fix the problem.

A high bot block rate is a silent killer of SEO performance. The site may appear to be functioning perfectly for human users, while in the background, search engine crawlers are being denied access. This can lead to a cascade of negative consequences, from a wasted crawl budget to widespread indexing problems. Proactively monitoring and minimizing these block rates is a hallmark of an advanced technical SEO strategy. The following sections will provide a deep dive into the definition, causes, impact, diagnosis, and solutions related to this critical and often misunderstood metric.

What Are SEO Bot Block Rates? A Definitive Explanation

Before you can fix the problem, you must first understand it. SEO bot block rates are a specific and measurable indicator of a website’s accessibility to search engines. A high rate is a clear signal of a significant underlying technical problem.

Defining the Term

SEO bot block rates are the percentage of requests from a verified, legitimate search engine bot (such as Googlebot) that are met with a “blocking” response from a website’s server. A block is typically a 403 Forbidden status code or a 5xx server error. It means the server received the request from the bot but refused or was unable to fulfill it. A 404 “Not Found” error is also a 4xx status code, but it is not counted as a block, because it simply means the requested page does not exist.
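The definition above can be expressed as a small predicate. This is a minimal sketch: the name `is_blocking_status` and the exact set of codes are assumptions for illustration, and the set should be adjusted to whatever your own stack returns when it refuses a crawler.

```python
# Assumption: only 403 counts as a "blocking" 4xx code, following the
# definition above. If your firewall or CDN rate-limits with 429, add it here.
BLOCKING_4XX = frozenset({403})


def is_blocking_status(status: int) -> bool:
    """Return True when a status code counts as a block under this sketch."""
    return status in BLOCKING_4XX or 500 <= status <= 599


# A 404 is a 4xx code but is not a block: the page simply does not exist.
for code in (200, 403, 404, 503):
    print(code, is_blocking_status(code))
```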

The Difference Between Intentional and Unintentional Blocks

It is important to distinguish between a “disallow” and a “block.” A Disallow directive in a robots.txt file is an intentional request for a bot not to crawl a URL. A “block,” on the other hand, is an unintentional and forceful denial of access when a bot tries to crawl a URL that it is otherwise allowed to access.
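To make the distinction concrete, the sketch below labels a request as "disallowed", "blocked", or "served". It uses Python's standard `urllib.robotparser`; the function name `classify_request`, the sample robots.txt rules, and the example URLs are hypothetical.

```python
from urllib.robotparser import RobotFileParser


def classify_request(robots_txt: str, user_agent: str, url: str, status: int) -> str:
    """Label a request as 'disallowed', 'blocked', or 'served'."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    if not parser.can_fetch(user_agent, url):
        # Intentional: robots.txt asks the bot not to crawl this URL.
        return "disallowed"
    if status == 403 or 500 <= status <= 599:
        # Unintentional: the URL is allowed, but the server refused or failed.
        return "blocked"
    return "served"


# Hypothetical robots.txt content for illustration.
ROBOTS = "User-agent: *\nDisallow: /private/\n"

print(classify_request(ROBOTS, "Googlebot", "https://example.com/private/a", 200))
print(classify_request(ROBOTS, "Googlebot", "https://example.com/blog/post", 403))
```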

Why This is a “Hidden” Problem

The primary reason why high SEO bot block rates are so dangerous is that they are often completely invisible to the website’s owners and users. The site may load perfectly for all human visitors. The only way to discover that legitimate bots are being blocked is by analyzing the raw server log files, which is a process that many webmasters overlook.

The Devastating Impact of High SEO Bot Block Rates

A persistently high bot block rate can have a devastating and compounding impact on a website’s SEO performance. It creates a series of negative feedback loops that can starve a site of the crawl activity it needs to rank.

A Direct Hit to Crawlability

The most immediate and obvious impact is on crawlability. If a search engine bot is blocked from accessing a page, it cannot crawl that page’s content. This means that any new content or updates on that page will not be discovered by the search engine. Each blocked request also still uses up part of the site’s crawl budget without returning any content, so the budget is wasted.

Negative Signals to Search Engines

High SEO bot block rates send a very strong negative signal to search engines. They suggest that the website’s server is unreliable, unstable, or overloaded. In response to this signal, a crawler like Googlebot will automatically slow down its crawl rate for the entire site. It does this to avoid causing further problems for the struggling server. This “self-throttling” means that the entire site will be crawled less frequently.

Widespread Indexing Issues

This combination of direct blocks and a reduced overall crawl rate can lead to a cascade of indexing problems. Pages that were once indexed may be dropped due to a perceived lack of stability. New pages may take an extremely long time to be indexed. It can also contribute to a large number of pages getting stuck in the “Discovered - currently not indexed” status in Google Search Console.

Creation of Orphan Pages

If a bot is blocked from crawling a key category or hub page, it will be unable to follow the links on that page to discover the sub-pages. This can leave entire sections of a website undiscoverable, effectively turning them into orphan pages.

The Common Causes of High SEO Bot Block Rates

High SEO bot block rates are almost always caused by a misconfiguration in a website’s security or server infrastructure. These systems are designed to protect the site from malicious traffic, but they can often be overly aggressive.

- Overly Aggressive Firewalls and Security Plugins: Web Application Firewalls (WAFs) and security plugins are designed to block suspicious traffic. However, they can sometimes misidentify the rapid, high-volume crawling of a legitimate search engine bot as a malicious attack and begin to block its requests.

- Poorly Configured CDNs: A Content Delivery Network (CDN) can sometimes be the source of the blocks. The security settings on the CDN may be too strict, or it may be configured to block traffic from the geographic regions that search engine bots crawl from. Googlebot, for example, crawls mostly from IP addresses in the United States, so a country-level block can shut it out.

- Server Overload: If a website is on an underpowered hosting plan, a high volume of requests from a search engine bot can be enough to overwhelm the server’s resources (CPU and RAM). This can cause the server to start returning 5xx errors and blocking further requests.

- Incorrect IP Whitelisting/Blacklisting: In some cases, a server administrator may have accidentally included a range of IP addresses used by a search engine bot in a security blacklist.

- Aggressive Anti-Scraping Tools: Tools that are designed to prevent content scraping can often be too aggressive and can end up blocking the very search engine bots that a site needs to allow.

The Diagnostic Process: Uncovering Block Rates with Log File Analysis

The only definitive way to diagnose and measure a site’s bot block rate is through a detailed analysis of its server log files.

Why Server Logs are the Only Source of Truth

Server logs contain a record of every single request made to a server, including the user-agent of the requestor and the HTTP status code that was returned. This is the raw data that shows exactly how a site is responding to search engine bots.
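As an illustration of what that raw data looks like, the sketch below parses one line in the widely used "combined" access log format. The regular expression and the `parse_line` helper are assumptions: your server may log in a different format, and the sample line is invented.

```python
import re
from typing import Optional

# Assumption: the server writes the "combined" log format
# (IP, identity, user, [time], "request", status, bytes, "referer", "user-agent").
LOG_PATTERN = re.compile(
    r'(?P<ip>\S+) \S+ \S+ \[(?P<time>[^\]]+)\] '
    r'"(?P<method>\S+) (?P<path>\S+) [^"]*" '
    r'(?P<status>\d{3}) \S+ '
    r'"[^"]*" "(?P<user_agent>[^"]*)"'
)


def parse_line(line: str) -> Optional[dict]:
    """Return the fields of one log line, or None if it does not match."""
    match = LOG_PATTERN.match(line)
    if match is None:
        return None
    record = match.groupdict()
    record["status"] = int(record["status"])
    return record


# Invented sample line using a documentation-only IP address.
SAMPLE = (
    '192.0.2.10 - - [10/Mar/2024:06:25:14 +0000] '
    '"GET /blog/post HTTP/1.1" 403 512 "-" '
    '"Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)"'
)

print(parse_line(SAMPLE))
```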

Step 1: Obtain Your Server Log Files

The first step is to get access to the raw server log files. This usually involves requesting them from the website’s hosting provider or server administrator.

Step 2: Ingest and Process the Log Data

Log files can be massive, often containing millions of lines of data. They need to be processed using a specialized log file analyzer tool or by importing them into a spreadsheet or database for analysis.
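When a dedicated analyzer is not available, a short script can process the file one line at a time so that it never has to fit in memory. The following is a rough sketch; `count_statuses` is a hypothetical helper, and the pattern assumes the status code directly follows the quoted request string, as it does in the common and combined log formats.

```python
import re
from collections import Counter
from typing import Iterable

# Assumption: the status code is the three-digit field right after the
# closing quote of the request string.
STATUS_RE = re.compile(r'" (\d{3}) ')


def count_statuses(lines: Iterable[str]) -> Counter:
    """Tally status codes from any iterable of log lines, one line at a time."""
    counts: Counter = Counter()
    for line in lines:
        match = STATUS_RE.search(line)
        if match:
            counts[int(match.group(1))] += 1
    return counts


# Usage with a real file (the path is hypothetical); the file object is
# iterated lazily, so only one line is held in memory at a time:
#
#     with open("access.log", encoding="utf-8", errors="replace") as handle:
#         print(count_statuses(handle).most_common())
```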

Step 3: Isolate Legitimate Search Engine Bot Traffic

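It is essential to verify that the traffic being analyzed is from a legitimate search engine bot and not from a fake bot that is pretending to be one, since the user-agent string alone is trivial to spoof. This is done by performing a reverse DNS lookup on the IP addresses in the log file to confirm that the hostname belongs to a real search engine, and then a forward DNS lookup on that hostname to confirm that it resolves back to the same IP address.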
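The verification step can be sketched with Python's standard `socket` module. The function name `verify_crawler_ip` is hypothetical, and the hostname suffixes are an assumption based on the domains Google publishes for Googlebot, so check them against the search engine's current documentation. The forward lookup used here covers IPv4 only.

```python
import socket
from typing import Callable, Tuple

# Assumption: hostnames of genuine Googlebot IPs end in one of these suffixes.
GOOGLE_SUFFIXES: Tuple[str, ...] = (".googlebot.com", ".google.com")


def verify_crawler_ip(
    ip: str,
    suffixes: Tuple[str, ...] = GOOGLE_SUFFIXES,
    reverse_lookup: Callable = socket.gethostbyaddr,
    forward_lookup: Callable = socket.gethostbyname_ex,
) -> bool:
    """Return True if reverse DNS matches a known suffix and the hostname
    resolves back to the same IP address."""
    try:
        hostname = reverse_lookup(ip)[0]
    except OSError:
        return False  # No reverse DNS record: treat as unverified.
    if not hostname.endswith(suffixes):
        return False
    try:
        addresses = forward_lookup(hostname)[2]
    except OSError:
        return False
    return ip in addresses
```

The lookup functions are passed in as parameters so that the logic can be exercised without network access. In practice, results are worth caching per IP address, because DNS lookups are slow relative to log parsing.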

Step 4: Calculate the Block Rate

Once the legitimate bot traffic has been isolated, the block rate can be calculated. This is done by dividing the number of requests that resulted in a blocking status code (typically a 403 or a 5xx) by the total number of requests from that bot, then multiplying by 100 to get a percentage. As a rule of thumb, any block rate that is consistently above 1-2% is a sign of a serious problem that needs to be investigated. This is a key part of any deep technical SEO audit.
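The calculation itself is simple arithmetic. A minimal sketch, assuming the status codes of verified bot requests have already been extracted into a list (the `block_rate` name and the sample numbers are invented for illustration):

```python
from typing import Iterable


def block_rate(statuses: Iterable[int]) -> float:
    """Percentage of requests answered with a 403 or a 5xx status code."""
    total = 0
    blocked = 0
    for status in statuses:
        total += 1
        if status == 403 or 500 <= status <= 599:
            blocked += 1
    return 100.0 * blocked / total if total else 0.0


# Invented example: 100 verified bot requests, 3 refused and 2 server errors.
statuses = [200] * 95 + [403] * 3 + [503] * 2
print(f"{block_rate(statuses):.1f}%")  # 5.0%
```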

Step 5: Segment the Data to Find the Cause

To find the root cause of the blocks, the data should be segmented. Are the blocks happening on a specific subdirectory of the site? Are they happening at a particular time of day? Analyzing these patterns can provide clues about the underlying issue.
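One way to segment is to group requests by a key, such as top-level directory or hour of day, and compute the block rate per group. The sketch below assumes each record is a dict with `path`, `time`, and `status` fields, with `time` in the usual access-log timestamp format; all names and sample records are hypothetical.

```python
from collections import defaultdict
from datetime import datetime
from typing import Callable, Dict, Hashable, Iterable


def top_directory(record: dict) -> str:
    """First path segment, e.g. '/shop' for '/shop/item?x=1'."""
    first = record["path"].split("?")[0].strip("/").split("/")[0]
    return "/" + first if first else "/"


def hour_of_day(record: dict) -> int:
    """Hour (0-23) parsed from a timestamp like '10/Mar/2024:06:25:14 +0000'."""
    return datetime.strptime(record["time"], "%d/%b/%Y:%H:%M:%S %z").hour


def block_rate_by(records: Iterable[dict], key: Callable[[dict], Hashable]) -> Dict:
    """Block rate (percent) per group, where a block is a 403 or a 5xx."""
    totals: Dict = defaultdict(int)
    blocks: Dict = defaultdict(int)
    for record in records:
        group = key(record)
        totals[group] += 1
        if record["status"] == 403 or 500 <= record["status"] <= 599:
            blocks[group] += 1
    return {group: 100.0 * blocks[group] / totals[group] for group in totals}


# Invented records for illustration.
records = [
    {"path": "/shop/a", "time": "10/Mar/2024:02:00:01 +0000", "status": 403},
    {"path": "/shop/b", "time": "10/Mar/2024:02:10:00 +0000", "status": 200},
    {"path": "/blog/x", "time": "10/Mar/2024:14:00:00 +0000", "status": 200},
]
print(block_rate_by(records, top_directory))
print(block_rate_by(records, hour_of_day))
```

A group with a much higher rate than the rest, such as one directory or one overnight hour, is usually the place to start looking.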

Proven Strategies to Reduce Block Rates and Improve Crawlability

Once a high SEO bot block rate has been diagnosed, there are several proven strategies to fix the problem.

Whitelisting Legitimate Bot IP Ranges

The most direct solution is to work with the server administrator or hosting provider to whitelist the known, published IP address ranges of major search engine bots like Googlebot. This tells the firewall and other security systems to always allow traffic from these verified sources.
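Before changing firewall rules, it can help to check which of the IP addresses seen in the logs actually fall inside the published ranges. The sketch below uses Python's standard `ipaddress` module. The CIDR ranges shown are reserved documentation placeholders, not real search engine ranges; substitute the ranges the search engine currently publishes.

```python
import ipaddress
from typing import Iterable, List

# Placeholder ranges reserved for documentation, NOT real crawler ranges.
PLACEHOLDER_RANGES = ["192.0.2.0/24", "2001:db8::/32"]


def build_allowlist(cidr_ranges: Iterable[str]) -> List:
    """Parse CIDR strings into network objects once, up front."""
    return [ipaddress.ip_network(cidr) for cidr in cidr_ranges]


def is_allowlisted(ip: str, networks: List) -> bool:
    """Return True if the IP address falls inside any allowlisted network."""
    address = ipaddress.ip_address(ip)
    return any(address in network for network in networks)


networks = build_allowlist(PLACEHOLDER_RANGES)
print(is_allowlisted("192.0.2.44", networks))
print(is_allowlisted("198.51.100.7", networks))
```

Published ranges change over time, so any allowlist built this way needs to be refreshed on a schedule rather than hard-coded once.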

Configure Firewalls and Security Plugins Correctly

Review the settings of all security tools. Most professional-grade firewalls and security plugins have options to automatically detect and allow verified search engine bots. It is crucial to ensure that these settings are enabled.

Upgrade Server and Hosting Resources

If the analysis shows that the blocks are primarily 5xx server errors and are correlated with high crawl activity, it is a strong sign that the server is overloaded. The most direct long-term solution is to upgrade to a more robust hosting plan with more CPU and RAM.
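One rough way to check for that correlation is to compare request volume and 5xx rate per hour. The sketch below is illustrative only: `hourly_load_profile` is a hypothetical helper, and it assumes the bot requests have already been reduced to `(hour_bucket, status)` pairs.

```python
from collections import Counter
from typing import Iterable, List, Tuple


def hourly_load_profile(records: Iterable[Tuple[str, int]]) -> List[Tuple[str, int, float]]:
    """Return (hour_bucket, request_count, 5xx_percent) rows, sorted by
    request count so that error rates can be read against rising volume."""
    requests: Counter = Counter()
    errors: Counter = Counter()
    for hour, status in records:
        requests[hour] += 1
        if 500 <= status <= 599:
            errors[hour] += 1
    rows = [
        (hour, requests[hour], 100.0 * errors[hour] / requests[hour])
        for hour in requests
    ]
    return sorted(rows, key=lambda row: row[1])


# Invented data: a quiet hour with no errors and a busy hour with some 503s.
sample = [("02", 200)] * 10 + [("03", 200)] * 30 + [("03", 503)] * 10
for row in hourly_load_profile(sample):
    print(row)
```

If the 5xx percentage climbs as the request count climbs, overload is the likely cause. If errors appear regardless of volume, the problem more likely lies elsewhere.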

Optimize Crawl Efficiency

Another way to reduce the strain on a server is to make the crawl process more efficient. By identifying and fixing the sources of crawl waste on a site, such as the thousands of duplicate URLs that can be generated by faceted navigation, a webmaster can reduce the total number of URLs that a bot needs to crawl. This lowers the overall number of requests and can reduce server load.
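To get a sense of how much crawl waste faceted navigation creates, crawled URLs can be grouped by what remains after the facet parameters are stripped. This is a sketch under stated assumptions: the parameter names in `FACET_PARAMS` are hypothetical and need to be replaced with the ones your site actually uses.

```python
from collections import defaultdict
from typing import Dict, Iterable, List
from urllib.parse import parse_qsl, urlencode, urlsplit, urlunsplit

# Hypothetical facet parameters; replace with your site's own.
FACET_PARAMS = {"color", "size", "sort"}


def canonical_form(url: str) -> str:
    """Drop facet parameters (and any fragment), keeping other query parameters."""
    parts = urlsplit(url)
    kept = [
        (key, value)
        for key, value in parse_qsl(parts.query, keep_blank_values=True)
        if key not in FACET_PARAMS
    ]
    return urlunsplit((parts.scheme, parts.netloc, parts.path, urlencode(kept), ""))


def group_duplicates(urls: Iterable[str]) -> Dict[str, List[str]]:
    """Map each canonical URL to its crawled variants, where more than one exists."""
    groups: Dict[str, List[str]] = defaultdict(list)
    for url in urls:
        groups[canonical_form(url)].append(url)
    return {base: variants for base, variants in groups.items() if len(variants) > 1}


# Invented URLs for illustration.
crawled = ["/shoes?color=red", "/shoes?color=blue&sort=price", "/shoes", "/hats?page=2"]
print(group_duplicates(crawled))
```

Groups with many variants are candidates for canonical tags, parameter handling, or robots.txt rules, depending on how the site is built.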

Conclusion

High SEO bot block rates are one of the most dangerous and insidious issues in technical SEO. They are an unseen barrier that can silently suffocate a website’s ability to be discovered and indexed by search engines. A commitment to regularly monitoring server logs and maintaining a low block rate is a hallmark of an advanced and highly professional SEO program. By ensuring that legitimate search engine bots have unimpeded access to a site’s content, webmasters can remove a major obstacle to their ranking potential and build a foundation for a healthier and more visible website.

Frequently Asked Questions About SEO Bot Block Rates

What is a bot block rate?

A bot block rate is the percentage of requests from a legitimate search engine crawler that are denied access by a website’s server, typically with a 403 or a 5xx status code.

How do I know if I am blocking search engine bots?

The only reliable way to know is to perform a server log file analysis. This will show you exactly how your server is responding to requests from search engine bots.

Why would a website block Googlebot?

A website almost never blocks Googlebot intentionally. It is usually an unintentional consequence of an overly aggressive firewall or security plugin that misidentifies the bot’s rapid crawling as a threat, or of an underpowered server that cannot keep up with the bot’s requests.

Is a 403 error bad for SEO?

Yes, a 403 “Forbidden” error is very bad for SEO if it is being served to a search engine bot. It prevents the bot from crawling the page.

How do I unblock Googlebot?

You can unblock Googlebot by working with your server administrator to whitelist its known IP addresses and by correctly configuring your firewall and other security settings to allow verified crawlers to pass. This is a key part of any comprehensive search engine marketing strategy.