Faceted navigation is a powerful tool for improving user experience on e-commerce and listing sites, but its improper handling is one of the most common causes of severe technical SEO issues. Also known as faceted search or filtering, this functionality allows users to refine and sort a large set of results based on multiple attributes. While it is indispensable for usability, it can create a near-infinite number of low-value, duplicate URLs that can cripple a website’s SEO performance. This guide will provide proven, SEO-friendly solutions to tame the complexity of faceted navigation.

For any large e-commerce or marketplace website, the stakes are high. A poorly managed faceted navigation system can waste much of the crawl budget, create large amounts of duplicate content, and dilute ranking signals, preventing a site’s most important pages from being found. A strategic and technically sound approach is not just a best practice; it is a requirement for success in a competitive search landscape. The following sections explain the problems faceted navigation causes and break down the technical solutions for managing it effectively.

What is Faceted Navigation? A Double-Edged Sword

Faceted navigation is a true double-edged sword. It is simultaneously one of the most valuable features for users and one of the most dangerous features for SEO. Understanding this duality is the first step toward finding a balanced and effective solution.

The User Experience Benefit

From a user’s perspective, faceted navigation is essential. Imagine an online clothing store with thousands of products. A user looking for a specific item can use the facets—or filters—to quickly narrow down the results. They can filter by size, color, brand, price range, and more. This ability to refine a large set of items into a manageable selection is a cornerstone of a positive user experience on any large listing site.

The SEO Nightmare: The URL Explosion

The technical problem arises from how most faceted navigation systems are implemented. Each time a user clicks on a filter, a new URL is often generated, typically by adding a parameter to the base URL. For example, a user filtering for blue shoes in a specific size might generate a URL like /shoes?color=blue&size=10.

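While this is not a problem for a single user, it creates a massive issue for search engines. If a site has ten different filter categories, each with ten options, the number of possible combinations runs into the billions, even when only one option can be selected per category: each category has eleven states (ten options plus "not applied"), which gives 11^10, or about 26 billion, combinations. This is the URL explosion.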
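The arithmetic behind the URL explosion can be checked with a few lines of Python. This is a minimal sketch that assumes each filter category allows at most one selected option; the function name `count_filter_urls` is made up for illustration. Multi-select filters and varying parameter order would push the count higher still.

```python
def count_filter_urls(options_per_facet: list[int]) -> int:
    """Count distinct filtered views when each facet has at most one option selected."""
    total = 1
    for n in options_per_facet:
        total *= n + 1  # n options plus the "not applied" state
    return total - 1  # exclude the unfiltered category page itself


# Ten facets with ten options each
print(count_filter_urls([10] * 10))  # 25937424600
```

Even a modest setup of three filters with three options each already produces 63 filtered views of a single category page.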

The Two Deadly SEO Consequences

This URL explosion has two direct and devastating consequences for a website’s SEO performance.

1. Massive Duplicate Content: The vast majority of these generated URLs show content that is only slightly different from the main category page or from each other. Search engines see this as a massive internal duplicate content problem. This confuses them about which page to rank and severely dilutes the authority of the main category page.

2. Catastrophic Crawl Budget Waste: Search engine crawlers like Googlebot can attempt to crawl every unique, crawlable URL they discover. When a site exposes millions of low-value faceted URLs, the crawler can spend most of its crawl budget on these unimportant pages. This means it may not have the resources to crawl and index the site’s most important content, such as new product pages or blog posts. This can lead to important pages being stuck in the “Discovered - currently not indexed” status in Google Search Console.

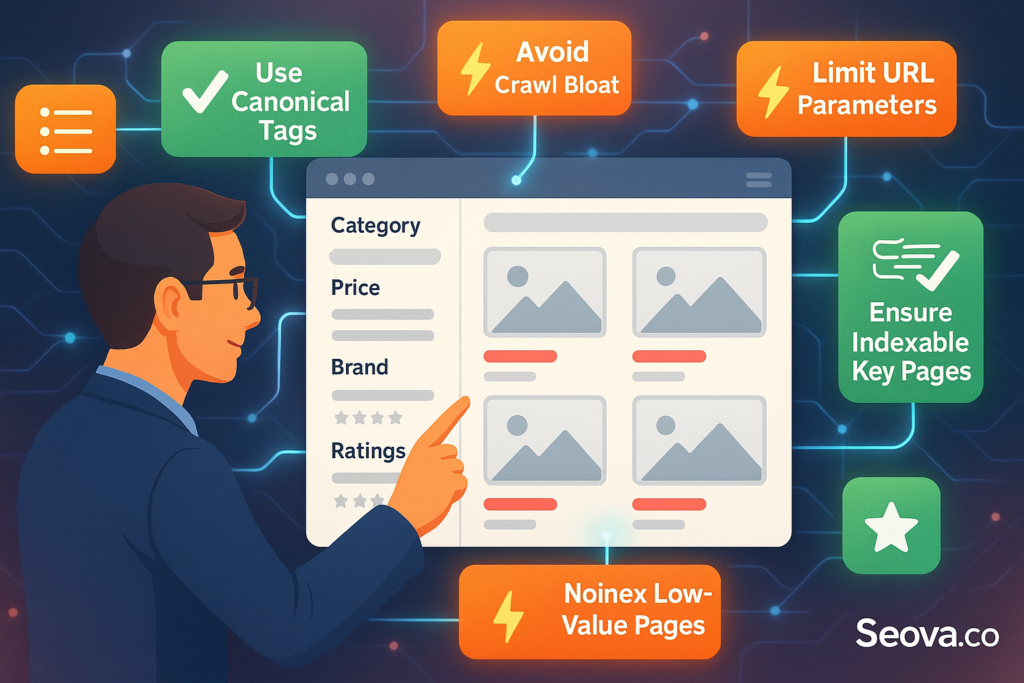

Proven SEO-Friendly Solutions for Faceted Navigation

Taming the complexity of faceted navigation requires a deliberate and multi-layered technical SEO strategy. There is no single “magic bullet” solution; the best approach often involves a combination of different techniques. The goal is always to provide the filtering functionality to users while preventing search engines from crawling and indexing the vast majority of the resulting URLs.

Solution #1: The AJAX Approach (Without URL Changes)

One effective way to handle faceted navigation is to use AJAX to load the filtered content without changing the URL in the browser’s address bar.

How it Works

In this setup, when a user clicks on a filter, a script runs in the background to fetch the new set of products and display them on the page. The URL of the page itself does not change. Because no new, unique URLs are generated, there are no new pages for search engines to crawl.
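On the server side, this pattern can be sketched as an endpoint that receives the selected filters in a request body rather than in the URL. The example below is only an illustration: the `PRODUCTS` data, the `handle_filter_request` function, and the JSON payload shape are all hypothetical, and a real site would wire this logic into its own web framework and front-end script.

```python
import json

# Hypothetical catalog data for illustration only
PRODUCTS = [
    {"name": "Trail Runner", "color": "blue", "size": 10},
    {"name": "Road Racer", "color": "red", "size": 10},
    {"name": "City Walker", "color": "blue", "size": 9},
]


def handle_filter_request(body: str) -> str:
    """Return the matching products as JSON for a POSTed filter selection.

    The filters arrive in the request body, so the page URL never changes
    and no crawlable filter URL is created.
    """
    filters = json.loads(body)  # e.g. {"color": "blue", "size": 10}
    matches = [
        product for product in PRODUCTS
        if all(product.get(key) == value for key, value in filters.items())
    ]
    return json.dumps(matches)


print(handle_filter_request('{"color": "blue", "size": 10}'))
# [{"name": "Trail Runner", "color": "blue", "size": 10}]
```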

Pros and Cons

This method is effective at preventing the URL explosion, provided the filter controls are not also exposed as crawlable links. It has two drawbacks. Users cannot bookmark or share a link to their specific filtered view of the page, and no filtered view can be indexed, so even combinations with real search demand cannot rank on their own.

Solution #2: The Canonical Tag Approach

Another common approach is to allow the faceted URLs to be generated, but to use a canonical tag to signal the preferred version to search engines.

How it Works

In this model, a rel="canonical" tag is placed on all the filtered, parameterized URLs. This tag points back to the main, unfiltered category page. This tells search engines, “While this filtered page exists, the main category page is the master version that you should index and rank.”
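As a rough sketch of how such a tag could be generated, the function below strips the query string and fragment from a filtered URL and emits the canonical tag for the clean category URL. The function name `canonical_link_tag` and the example.com URL are assumptions for illustration; it also assumes that every query parameter on the site is a filter, which will not hold for sites that use parameters for pagination or other content.

```python
from html import escape
from urllib.parse import urlsplit, urlunsplit


def canonical_link_tag(url: str) -> str:
    """Build a canonical tag that points at the URL without query string or fragment."""
    parts = urlsplit(url)
    clean_url = urlunsplit((parts.scheme, parts.netloc, parts.path, "", ""))
    return f'<link rel="canonical" href="{escape(clean_url, quote=True)}">'


print(canonical_link_tag("https://example.com/shoes?color=blue&size=10"))
# <link rel="canonical" href="https://example.com/shoes">
```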

Pros and Cons

This is a relatively simple solution that helps to consolidate ranking signals to the main category page. However, it has two limitations. First, a canonical tag is a hint rather than a directive, and search engines may ignore it when the filtered page differs substantially from the category page. Second, it does not solve the crawl budget problem, because search engines still need to crawl each faceted URL to see the canonical tag.

Solution #3: The noindex Meta Robots Tag Approach

This solution involves using the noindex meta robots tag to prevent the filtered pages from appearing in the search index.

How it Works

A rule is created to programmatically add a <meta name="robots" content="noindex"> tag to the HTML head of any URL that is generated by a filter. This is a direct command to search engines not to include that page in their index.
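One way such a rule might look in a page template is sketched below. The `FILTER_PARAMS` set and the `robots_meta_tag` function are hypothetical; the parameter names would need to match whatever the site's own filter system actually generates.

```python
from urllib.parse import parse_qs, urlsplit

# Hypothetical list of query parameters that the site's filters generate
FILTER_PARAMS = {"color", "size", "brand", "price"}


def robots_meta_tag(url: str) -> str:
    """Return a noindex meta tag for filtered URLs, or an empty string otherwise."""
    query = parse_qs(urlsplit(url).query, keep_blank_values=True)
    if not FILTER_PARAMS.isdisjoint(query):
        return '<meta name="robots" content="noindex">'
    return ""


print(robots_meta_tag("/shoes?color=blue&size=10"))
# <meta name="robots" content="noindex">
```

An unfiltered URL such as /shoes returns an empty string here, so the main category page stays indexable.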

Pros and Cons

This is a very effective way to keep the search results clean and to prevent duplicate content from being indexed. However, like the canonical solution, it does not prevent the pages from being crawled, so it is not a solution for crawl budget waste.

Solution #4: The Robots.txt Disallow Approach

For a direct approach to solving the crawl budget problem, the robots.txt file can be used.

How it Works

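The robots.txt file can be configured to disallow crawling of any URL that contains a specific filter parameter. For example, the rule Disallow: /*?color= blocks crawlers from any URL whose query string begins with the “color” filter. A second rule, Disallow: /*&color=, is needed to cover URLs where “color” appears after another parameter, as in /shoes?size=10&color=blue.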
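Wildcard rules are easy to get subtly wrong, so it can help to test them against sample URLs before deploying. The sketch below is a simplified matcher written for illustration, not a full robots.txt parser: it handles only the `*` wildcard and the `$` end anchor in Disallow patterns and ignores Allow rules and rule precedence. It shows that a pattern like /*?color= only catches URLs where the parameter comes first in the query string.

```python
import re


def robots_pattern_to_regex(pattern: str) -> re.Pattern:
    """Translate a robots.txt path pattern ('*' wildcard, optional '$' anchor) to a regex."""
    anchored = pattern.endswith("$")
    if anchored:
        pattern = pattern[:-1]
    body = ".*".join(re.escape(piece) for piece in pattern.split("*"))
    return re.compile(body + ("$" if anchored else ""))


def is_disallowed(path: str, disallow_patterns: list[str]) -> bool:
    """True if any Disallow pattern matches from the start of the path."""
    return any(robots_pattern_to_regex(p).match(path) for p in disallow_patterns)


single_rule = ["/*?color="]
both_rules = ["/*?color=", "/*&color="]

print(is_disallowed("/shoes?color=blue&size=10", single_rule))  # True
print(is_disallowed("/shoes?size=10&color=blue", single_rule))  # False
print(is_disallowed("/shoes?size=10&color=blue", both_rules))   # True
```

The second call returns False because "color" follows an ampersand rather than the question mark, which is why a companion rule for the `&color=` form is usually needed.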

Pros and Cons

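This is the most effective method for preserving crawl budget. However, it is a blunt instrument. If a blocked URL happens to have valuable backlinks pointing to it, the authority from those links will be lost because the crawler cannot access the page. Blocking crawling also does not guarantee exclusion from the index: a blocked URL that is linked from elsewhere can still be indexed without its content.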

Solution #5: The Hybrid “Gold Standard” Approach

For most large websites, the best and most robust solution is a hybrid approach that intelligently combines several of the techniques above. This allows for a nuanced strategy that balances SEO needs with user experience.

The Step-by-Step Hybrid Strategy

First, a business must identify any specific faceted URLs that actually have significant search demand. For example, a page for “blue running shoes” might be a combination of a category and a color filter, but it could be a valuable landing page to have indexed.

Second, for all other, non-valuable filter combinations, the URL parameters should be blocked from crawling in the robots.txt file. This is the primary defense against crawl budget waste.

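Third, a noindex tag should be applied to non-valuable faceted URLs that are already indexed or that must stay crawlable. A crawler can only obey a noindex tag on a page it is allowed to fetch, so the tag has no effect on URLs that are blocked in robots.txt. For URLs that are already in the index, leave them crawlable with the noindex tag in place until they have dropped out, and only then add the Disallow rule.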

Finally, for parameters that do not significantly change the content, such as those used for sorting (?sort=price), a canonical tag pointing to the clean, unsorted version of the page is the best solution. This entire process is a core part of any professional technical SEO audit.
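The decision logic in the steps above can be sketched as a small classifier. Everything in this example is an assumption made for illustration: the `INDEXABLE_COMBINATIONS` allow-list, the `SORT_ONLY_PARAMS` set, the `classify_url` function, and the three action labels. A real site would derive the allow-list from its own keyword research and URL scheme.

```python
from urllib.parse import parse_qsl, urlsplit

# Hypothetical configuration: filtered pages with proven search demand,
# stored as (path, sorted parameter pairs)
INDEXABLE_COMBINATIONS = {("/running-shoes", (("color", "blue"),))}
# Hypothetical parameters that reorder content without changing it
SORT_ONLY_PARAMS = {"sort"}


def classify_url(url: str) -> str:
    """Map a URL to one of: 'index', 'canonicalize', 'restrict'."""
    parts = urlsplit(url)
    params = tuple(sorted(parse_qsl(parts.query)))
    if not params:
        return "index"  # clean category page
    if (parts.path, params) in INDEXABLE_COMBINATIONS:
        return "index"  # filtered page with search demand
    if all(name in SORT_ONLY_PARAMS for name, _ in params):
        return "canonicalize"  # same content in a different order
    return "restrict"  # keep out of the index via robots.txt and/or noindex


for url in ["/running-shoes?color=blue",
            "/running-shoes?sort=price",
            "/running-shoes?color=blue&size=10"]:
    print(url, "->", classify_url(url))
```

Sorting the parameters before the lookup means /running-shoes?color=blue is recognized regardless of the order in which a filter system appends its parameters.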

Auditing and Monitoring Your Implementation

Implementing a solution for faceted navigation is not a one-time task. It requires ongoing monitoring and auditing to ensure that it is working correctly and to catch any new issues that may arise.

Using Site Crawlers

A site crawling tool is essential for auditing faceted navigation. The crawler should be configured to discover and analyze all the parameterized URLs that are being generated. This can help to identify issues like broken links in the filter system or incorrect canonical tag implementations.
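Dedicated crawling tools report these signals out of the box, but the underlying check is simple enough to sketch with Python's standard library. The class and function names below are made up for illustration, and the sample HTML is a hand-written stand-in for a fetched filtered page.

```python
from html.parser import HTMLParser


class IndexingSignalParser(HTMLParser):
    """Collect the canonical URL and robots meta directives from a page."""

    def __init__(self) -> None:
        super().__init__()
        self.canonical = None
        self.robots = None

    def handle_starttag(self, tag, attrs):
        attr = dict(attrs)
        if tag == "link" and (attr.get("rel") or "").lower() == "canonical":
            self.canonical = attr.get("href")
        elif tag == "meta" and (attr.get("name") or "").lower() == "robots":
            self.robots = attr.get("content")


def extract_signals(html: str) -> dict:
    """Return the canonical href and robots meta content found in the HTML."""
    parser = IndexingSignalParser()
    parser.feed(html)
    return {"canonical": parser.canonical, "robots": parser.robots}


sample = (
    '<html><head>'
    '<link rel="canonical" href="https://example.com/shoes">'
    '<meta name="robots" content="noindex">'
    '</head><body></body></html>'
)
print(extract_signals(sample))
# {'canonical': 'https://example.com/shoes', 'robots': 'noindex'}
```

Running a check like this across a sample of parameterized URLs makes it easy to spot filtered pages whose canonical tag points to themselves or whose noindex tag is missing.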

Analyzing Google Search Console Reports

Google Search Console provides several key reports for monitoring this issue. The Crawl Stats report can show if there is a sudden spike in the number of pages being crawled, which can be a sign of a problem. The Page indexing report (formerly called Coverage) can show if a large number of faceted URLs are being indexed.

Server Log File Analysis

The ultimate source of truth for understanding how a search engine is interacting with a faceted navigation system is a server log file analysis. By analyzing the raw logs, an SEO professional can see exactly which faceted URLs are being crawled by Googlebot and how much of the site’s crawl budget they are consuming. This can also reveal a high rate of failed or blocked bot requests (for example, 429 or 5xx responses), which could be a sign that the faceted URLs are overloading the server.
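A first pass at this kind of analysis can be sketched in a few lines. The example below assumes the server writes logs in the common "combined" format and identifies Googlebot by its user-agent string alone; the sample log lines are invented for illustration. Because user agents can be spoofed, a production analysis would also verify the requesting IP addresses.

```python
import re
from collections import Counter
from urllib.parse import parse_qsl, urlsplit

# Matches the request path and user agent in a combined-format access log line
LOG_LINE = re.compile(
    r'"(?:GET|HEAD) (?P<path>\S+) [^"]*" \d{3} \S+ "[^"]*" "(?P<agent>[^"]*)"'
)

# Invented sample lines for illustration
SAMPLE_LOG = [
    '66.249.66.1 - - [10/Oct/2024:13:55:36 +0000] "GET /shoes?color=blue&size=10 HTTP/1.1" 200 5120 "-" "Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)"',
    '66.249.66.1 - - [10/Oct/2024:13:55:40 +0000] "GET /shoes?color=red HTTP/1.1" 200 4980 "-" "Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)"',
    '203.0.113.7 - - [10/Oct/2024:13:56:02 +0000] "GET /shoes?color=blue HTTP/1.1" 200 5120 "-" "Mozilla/5.0 (Windows NT 10.0; Win64; x64)"',
]


def googlebot_hits_by_parameter(lines: list[str]) -> Counter:
    """Count Googlebot requests per query parameter name."""
    hits = Counter()
    for line in lines:
        match = LOG_LINE.search(line)
        if not match or "Googlebot" not in match.group("agent"):
            continue  # skip unparseable lines and non-Googlebot traffic
        query = urlsplit(match.group("path")).query
        for name, _ in parse_qsl(query, keep_blank_values=True):
            hits[name] += 1
    return hits


print(googlebot_hits_by_parameter(SAMPLE_LOG))
# Counter({'color': 2, 'size': 1})
```

Parameters that dominate this count are the first candidates for a robots.txt rule or a noindex tag.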

The Key to Scalable E-commerce SEO

Faceted navigation is a powerful and essential feature for user experience on any large listing website. However, it presents one of the most severe and complex challenges in all of technical SEO. Taming this complexity is a hallmark of an advanced and highly effective optimization strategy. By implementing a strategic, multi-layered solution that intelligently controls crawling and indexing, businesses can provide a great filtering experience for their users without sacrificing their SEO performance. A well-managed faceted navigation system is a key to scalable and sustainable success for any large e-commerce or marketplace website.

Frequently Asked Questions About Faceted Navigation

What is faceted navigation?

Faceted navigation is a system that allows users on a website, typically an e-commerce site, to filter and refine a large list of items based on multiple attributes or “facets,” such as price, color, size, and brand.

Is faceted navigation bad for SEO?

Faceted navigation is only bad for SEO if it is implemented incorrectly. If not managed properly, it can create massive duplicate content and crawl budget issues that can be very damaging to a site’s performance.

How do you handle faceted navigation for SEO?

The best approach is typically a hybrid one. First, identify any valuable filtered pages that should be indexed. Then, use the robots.txt file to block the crawling of all other, non-valuable filtered URLs to save crawl budget. Where such URLs are already indexed, apply a noindex tag and keep them crawlable until they drop out, since crawlers cannot see a noindex tag on a blocked URL.

What is the difference between filtering and faceting?

The terms are often used interchangeably. Generally, “filtering” refers to reducing a set of results based on a single attribute, while “faceting” refers to a more advanced system that allows for filtering across multiple attributes simultaneously.

Should I noindex or canonicalize filtered pages?

For most non-valuable filtered pages, the most robust solution is to apply a noindex tag while the pages are still crawlable and then block them in robots.txt once they have left the index, because crawlers cannot read a noindex tag on a blocked URL. A canonical tag is often better for parameters that do not significantly change the page content, like a sort order. This is a key part of any search engine optimization strategy.