Googlebot is the generic name for Google’s web crawler, the software that discovers and crawls the content of the web so that Google can index it and make it searchable. For anyone involved in search engine optimization, understanding how Googlebot works is not just a technical curiosity; it is a fundamental requirement for success. All SEO efforts, from creating content to building a site’s architecture, are ultimately aimed at making Googlebot’s job as easy and efficient as possible. This guide walks through the crawling and indexing process and what it means for your website.

A website’s visibility in the search results is entirely dependent on its relationship with Googlebot. If the crawler cannot find, access, or understand a site’s content, that site simply will not rank. A professional approach to SEO is therefore built upon a foundation of making a site perfectly accessible and intelligible to this crucial visitor. The following sections will explore what Googlebot is, how its crawling process works step-by-step, the different types of crawlers, and how webmasters can effectively manage its interaction with their website.

The Core Function of Googlebot: Discovering the Web

At its core, Googlebot is a discovery engine. Its primary function is to constantly explore the vast landscape of the internet, finding new and updated content to add to Google’s massive search index. This process can be broken down into three main tasks.

Defining the Crawler

A web crawler, also known as a spider or a bot, is an automated program that systematically browses the web. Googlebot is the most well-known and sophisticated of these crawlers. It does not surf the web randomly; it follows links from page to page in a methodical way, collecting data as it goes.

The Three Main Tasks: Crawl, Process, Index

The work of Googlebot can be understood as a three-stage pipeline:

- Crawl: The first step is to visit, or “crawl,” billions of pages on the web. This involves making a request to a web server and downloading the content of the page.

- Process: Once a page has been crawled, its content and code are processed and analyzed. This is where Google’s systems work to understand what the page is about, a process that now includes rendering the page to see it as a human user would.

- Index: The processed information from the page is then stored and organized in the Google Search Index. When a user performs a search, Google sifts through this index to find the most relevant results.

Why Googlebot is So Important for SEO

The importance of Googlebot cannot be overstated. A website only becomes eligible to appear in Google’s search results after it has been successfully crawled and indexed. If any barrier prevents Googlebot from accessing a site’s content, that content will remain invisible to searchers. A core part of technical SEO is therefore about removing these barriers.

The Googlebot Crawling Process Explained Step-by-Step

The process by which Googlebot finds and crawls a website is a highly sophisticated and continuous cycle. Understanding each step in this journey is key to diagnosing and fixing potential issues.

Step 1: Discovering URLs

Googlebot must first discover that a URL exists before it can crawl it. It does this in two primary ways:

- Following Links: The main way Googlebot finds new pages is by following the links from pages it has already crawled.

- Reading Sitemaps: Webmasters can provide a direct list of all their important URLs by submitting an XML sitemap to Google Search Console. A sitemap lists each URL in a `<loc>` element, optionally with a last-modified date.
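The two discovery routes above can be sketched with Python's standard library. This is only an illustration of the idea, not how Googlebot is implemented: `links_from_html` and `urls_from_sitemap` are hypothetical helper names, and the sketch assumes a plain `<urlset>` sitemap rather than a sitemap index.

```python
from html.parser import HTMLParser
from urllib.parse import urljoin
import xml.etree.ElementTree as ET

# Namespace defined by the sitemaps.org protocol.
SITEMAP_NS = {"sm": "http://www.sitemaps.org/schemas/sitemap/0.9"}


class LinkExtractor(HTMLParser):
    """Collects absolute URLs from <a href="..."> tags."""

    def __init__(self, base_url):
        super().__init__()
        self.base_url = base_url
        self.links = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            href = dict(attrs).get("href")
            if href:
                # Resolve relative links against the page URL.
                self.links.append(urljoin(self.base_url, href))


def links_from_html(html, base_url):
    """Route 1: discover URLs by following links on a crawled page."""
    parser = LinkExtractor(base_url)
    parser.feed(html)
    return parser.links


def urls_from_sitemap(xml_text):
    """Route 2: discover URLs listed in an XML sitemap."""
    root = ET.fromstring(xml_text)
    return [loc.text.strip() for loc in root.findall("sm:url/sm:loc", SITEMAP_NS)]
```

A crawler built on these helpers would merge both lists and drop duplicates before queueing anything for fetching.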

Step 2: Adding URLs to the Crawl Queue

Once a URL is discovered, it is added to a massive, prioritized list called the crawl queue. Not all URLs are treated equally. A URL from a highly authoritative and frequently updated website will likely be placed higher in the queue than a URL from a brand-new, unknown site.
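A prioritized queue of this kind can be sketched with a heap. Google does not publish how it scores URLs, so the numeric `priority` here is a hypothetical stand-in for whatever signals (authority, update frequency) the real scheduler uses, and `CrawlQueue` is an illustrative name.

```python
import heapq
import itertools


class CrawlQueue:
    """A toy crawl queue: higher-priority URLs are popped first."""

    def __init__(self):
        self._heap = []
        self._seen = set()
        # Monotonic counter so equal priorities come out in insertion order.
        self._counter = itertools.count()

    def add(self, url, priority):
        """Queue a URL once. Returns False if it was already queued."""
        if url in self._seen:
            return False
        self._seen.add(url)
        # heapq is a min-heap, so the priority is negated.
        heapq.heappush(self._heap, (-priority, next(self._counter), url))
        return True

    def pop(self):
        """Return the highest-priority URL that has not been crawled yet."""
        _, _, url = heapq.heappop(self._heap)
        return url

    def __len__(self):
        return len(self._heap)
```

The `_seen` set matters as much as the ordering: without it, a page linked from thousands of places would be queued thousands of times.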

Step 3: Respecting Robots.txt

Before Googlebot attempts to crawl any URL from a host, it will first check that host’s robots.txt file. This file can contain directives that ask crawlers not to access certain pages or directories. Googlebot will respect these directives.
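To see how such directives are evaluated, the sketch below feeds a made-up robots.txt to Python's built-in `urllib.robotparser`. The rules and the example.com URLs are invented for illustration. Python's parser is also simpler than Google's (for instance, it does not apply Google's wildcard or longest-match handling), so treat it as an approximation rather than a replica of Googlebot's behavior.

```python
from urllib.robotparser import RobotFileParser

# A hypothetical robots.txt with a general group and a Googlebot-specific group.
ROBOTS_TXT = """\
User-agent: *
Disallow: /admin/

User-agent: Googlebot
Disallow: /private/
"""


def build_parser(robots_txt):
    """Parse robots.txt text that has already been downloaded."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser


parser = build_parser(ROBOTS_TXT)

# Googlebot matches its own group, so only /private/ is off limits to it.
print(parser.can_fetch("Googlebot", "https://example.com/private/report.html"))  # False
print(parser.can_fetch("Googlebot", "https://example.com/admin/"))  # True

# Any other crawler falls back to the "*" group.
print(parser.can_fetch("OtherBot", "https://example.com/admin/"))  # False
```

The second result is a common surprise: a crawler that finds a group addressed to it ignores the `*` group entirely, so rules meant for every bot have to be repeated inside the specific group.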

Step 4: The Fetch Request and Crawl Rate

When a URL’s turn comes up in the queue, Googlebot will make a request to the server to fetch the page’s content. The rate at which it does this is carefully managed to avoid overloading the website’s server. This is a key part of the crawl budget concept.
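The idea of pacing requests per server can be illustrated with a small throttle. This is a generic politeness sketch, not Google's algorithm: the fixed `min_interval` is an assumption (Googlebot adapts its rate to how the server responds), and `HostThrottle` is a hypothetical name.

```python
import time
from urllib.parse import urlsplit


class HostThrottle:
    """Enforces a minimum gap between requests to the same host."""

    def __init__(self, min_interval, clock=time.monotonic):
        self.min_interval = min_interval  # seconds between fetches per host
        self._clock = clock               # injectable for testing
        self._next_allowed = {}           # host -> earliest next fetch time

    def delay_for(self, url):
        """Return how long to wait before fetching url, and reserve the slot."""
        host = urlsplit(url).netloc
        now = self._clock()
        start = max(now, self._next_allowed.get(host, now))
        self._next_allowed[host] = start + self.min_interval
        return start - now
```

A fetch loop would call `time.sleep(throttle.delay_for(url))` before each request. Because the bookkeeping is per host, a slow site never holds up crawling of other sites.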

Step 5: Rendering the Page

For modern websites, this is a crucial step. Googlebot does not just read the raw HTML of a page. Its Web Rendering Service (WRS) loads the page in a headless browser and executes its JavaScript. This allows it to see the final, rendered version of the page as a human user would. A deep understanding of this process is the foundation of JavaScript SEO.

Step 6: Sending Content for Indexing

After the page has been successfully crawled and rendered, its content is passed on to the indexing pipeline, where it is analyzed and stored in the Search Index, making it eligible to appear in search results.

The Different Types of Googlebot

“Googlebot” is a generic name that actually refers to a whole family of different crawlers, each with a specific purpose. These crawlers are identified by their “user-agent” name in server log files.

- Googlebot Smartphone: This is now the primary and most important crawler for the vast majority of websites. Because of mobile-first indexing, Google predominantly uses this mobile crawler to see and evaluate web pages.

- Googlebot Desktop: This is the legacy desktop crawler. It is still in use, but for most sites it crawls far less often than the smartphone crawler.

- Googlebot Image: This crawler is specifically designed to discover and index the images on a webpage.

- Googlebot Video: This crawler focuses on finding and understanding the video content on a site.

- Googlebot News: This is a specialized crawler for websites that have been approved for inclusion in Google News.

- AdsBot: This crawler is used to check the quality of the landing pages for Google Ads campaigns.
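When reading server logs, the crawler type can usually be inferred from the user-agent string. The sketch below is a heuristic under stated assumptions: it relies on the commonly documented substrings `Googlebot-Image`, `Googlebot-Video`, and `AdsBot-Google`, and on the smartphone crawler's string containing `Mobile`. Googlebot News is left out on the assumption that it reuses the general Googlebot strings. Check Google's current crawler list before depending on any of these tokens.

```python
# (substring, label) pairs, checked before the generic "googlebot" match.
# These substrings are assumptions based on commonly documented user agents.
SPECIALIZED_TOKENS = [
    ("googlebot-image", "Googlebot Image"),
    ("googlebot-video", "Googlebot Video"),
    ("adsbot-google", "AdsBot"),
]


def classify_google_crawler(user_agent):
    """Return a crawler label for a user-agent string, or None if not Google."""
    ua = user_agent.lower()
    for token, label in SPECIALIZED_TOKENS:
        if token in ua:
            return label
    if "googlebot" in ua:
        # The smartphone crawler identifies itself as a mobile browser.
        return "Googlebot Smartphone" if "mobile" in ua else "Googlebot Desktop"
    return None
```

A match here only says what the request claims to be. User-agent strings are trivial to spoof, so the label is a starting point for analysis, not proof of origin.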

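It is important to be able to distinguish real Googlebot traffic from fake bots that merely claim to be Googlebot in their user-agent string. The standard check has two steps. First, perform a reverse DNS lookup on the requesting IP address and confirm that the resulting hostname belongs to a Google-owned domain such as googlebot.com or google.com. Second, perform a forward DNS lookup on that hostname and confirm that it resolves back to the same IP address. The forward step matters because whoever controls an IP range can publish any reverse DNS name for it.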
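A minimal sketch of that verification, using only Python's `socket` module. The function name, the injectable lookup parameters, and the exact suffix list are assumptions made for this example, and `gethostbyname_ex` only returns IPv4 addresses, so IPv6 traffic would need `socket.getaddrinfo` instead.

```python
import socket

# Domains assumed to be Google-owned for crawler hostnames.
GOOGLE_SUFFIXES = (".googlebot.com", ".google.com", ".googleusercontent.com")


def is_verified_googlebot(
    ip,
    reverse_lookup=socket.gethostbyaddr,
    forward_lookup=socket.gethostbyname_ex,
):
    """Check an IP with a reverse DNS lookup followed by a forward confirmation."""
    try:
        hostname = reverse_lookup(ip)[0]
    except OSError:
        return False  # no reverse DNS record

    # The leading dot in each suffix rejects look-alikes such as "evilgooglebot.com".
    if not hostname.lower().rstrip(".").endswith(GOOGLE_SUFFIXES):
        return False

    try:
        addresses = forward_lookup(hostname)[2]
    except OSError:
        return False  # hostname does not resolve

    # The hostname must resolve back to the IP that made the request.
    return ip in addresses
```

DNS lookups are slow compared with log parsing, so in practice the result for each IP is usually cached rather than recomputed per request.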

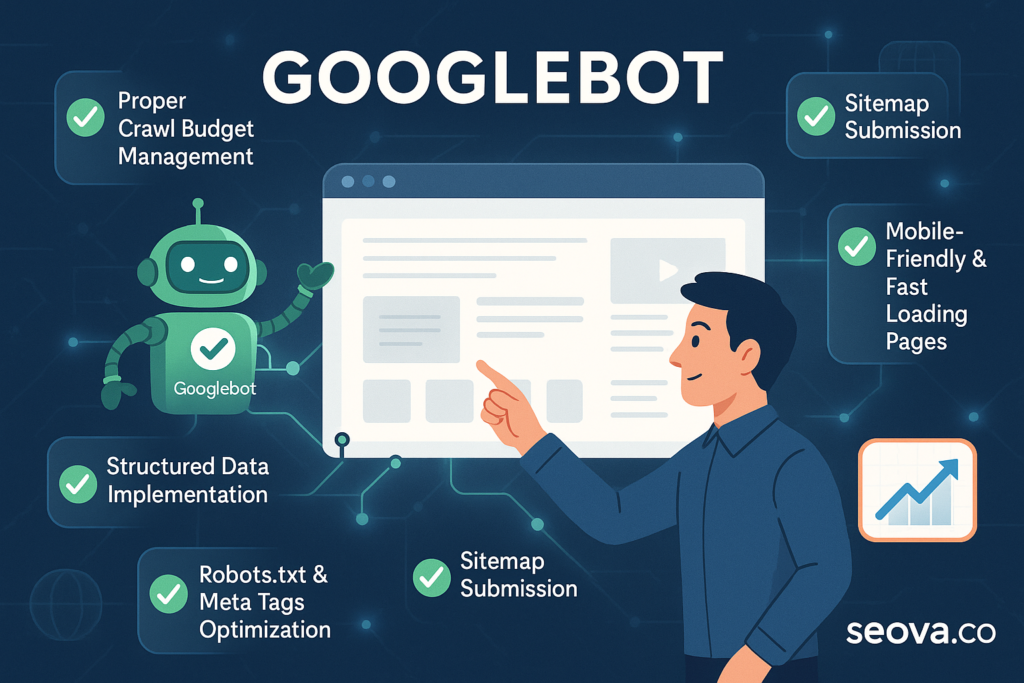

How to Control and Influence Googlebot

While webmasters do not have direct control over Googlebot, they have a powerful set of tools and signals that can be used to influence its behavior and guide its journey through a website.

Managing Crawl Access with Robots.txt

The robots.txt file is the most direct tool for controlling Googlebot. It allows a webmaster to tell the crawler which parts of a site it should not access. This is essential for preventing it from crawling low-value sections and for preserving the site’s crawl budget.

Controlling Indexing with the Meta Robots Tag

The meta robots tag provides page-specific instructions for Googlebot after it has crawled a page. The noindex directive tells Googlebot not to include that specific page in the search index. This is the correct way to control indexing, with one caveat: the page must remain crawlable. If robots.txt blocks the URL, Googlebot never fetches the page and so never sees the noindex directive.
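When auditing a set of pages, it can help to check programmatically which ones carry a noindex directive. The sketch below parses the meta tags with Python's standard library. The class and function names are hypothetical, and it only inspects the HTML: a noindex sent through the `X-Robots-Tag` HTTP header would not be detected.

```python
from html.parser import HTMLParser


class RobotsMetaParser(HTMLParser):
    """Collects directives from <meta name="robots"> and <meta name="googlebot">."""

    def __init__(self):
        super().__init__()
        self.directives = set()

    def handle_starttag(self, tag, attrs):
        if tag != "meta":
            return
        attr = {name: (value or "") for name, value in attrs}
        if attr.get("name", "").lower() in ("robots", "googlebot"):
            for part in attr.get("content", "").split(","):
                directive = part.strip().lower()
                if directive:
                    self.directives.add(directive)


def is_noindex(html):
    """True if the page asks search engines not to index it."""
    parser = RobotsMetaParser()
    parser.feed(html)
    # "none" is shorthand for "noindex, nofollow".
    return bool(parser.directives & {"noindex", "none"})
```

Running `is_noindex` over the pages listed in a sitemap is a quick way to catch a contradiction: URLs the site asks Google to discover but also asks it not to index.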

Guiding Discovery with XML Sitemaps

A clean, up-to-date XML sitemap is a powerful way to guide Googlebot’s discovery process. It provides a direct list of all the URLs a webmaster considers important, ensuring they are not missed.
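A sitemap is a small XML file, so it can be generated from whatever list of URLs a site already maintains. The following is a minimal sketch using Python's standard library. The `build_sitemap` name and the `(url, lastmod)` input shape are assumptions for illustration, and it covers only the `<loc>` and `<lastmod>` elements of the sitemaps.org format.

```python
import xml.etree.ElementTree as ET

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


def build_sitemap(entries):
    """Build sitemap XML from (url, lastmod) pairs.

    lastmod is a 'YYYY-MM-DD' string, or None to omit the element.
    """
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for loc, lastmod in entries:
        url = ET.SubElement(urlset, "url")
        # ElementTree escapes characters such as "&" in the URL automatically.
        ET.SubElement(url, "loc").text = loc
        if lastmod:
            ET.SubElement(url, "lastmod").text = lastmod
    body = ET.tostring(urlset, encoding="unicode")
    # The declaration says UTF-8, so write the result to disk with that encoding.
    return '<?xml version="1.0" encoding="UTF-8"?>\n' + body
```

Generating the file from the same source of truth as the site's pages (a CMS database or a route table) is what keeps it clean and current, since hand-maintained sitemaps tend to drift.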

Influencing Crawl Rate

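A website’s speed and server health have a direct impact on how aggressively Googlebot will crawl it. A fast, responsive server can handle a higher crawl rate, and Googlebot slows down when responses become slow or start returning server errors. Google Search Console formerly offered a crawl rate limiter setting, but Google retired it in early 2024. If a site’s server is being overwhelmed by crawl activity, the documented short-term remedy is to temporarily return 500, 503, or 429 status codes, which signals Googlebot to back off.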

Common Problems and Troubleshooting Googlebot Issues

Many common technical SEO issues are related to problems with how Googlebot is interacting with a website.

Issue #1: High SEO Bot Block Rates

This is a critical and often hidden problem. It occurs when a website’s server, firewall, or other security systems mistakenly identify Googlebot as a threat and start blocking its requests. It can be diagnosed by analyzing server log files and checking what percentage of Googlebot’s requests receive 4xx responses (typically 403 or 429) or 5xx responses. High SEO bot block rates can be devastating for crawlability.
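The log check described above can be sketched in a few lines of Python. This example assumes the common "combined" access log format used by Apache and Nginx, and the `googlebot_status_summary` name is hypothetical. It also trusts the user-agent string, so for a rigorous audit the matching IPs should be verified as genuine Googlebot first.

```python
import re
from collections import Counter

# Matches the request, status, size, referrer, and user-agent fields
# of a combined-format access log line.
LOG_PATTERN = re.compile(
    r'"[A-Z]+ \S+ [^"]*" (?P<status>\d{3}) \S+ "[^"]*" "(?P<agent>[^"]*)"'
)


def googlebot_status_summary(lines):
    """Count Googlebot responses by status class and compute the error share."""
    counts = Counter()
    for line in lines:
        match = LOG_PATTERN.search(line)
        if match and "googlebot" in match.group("agent").lower():
            counts[match.group("status")[0] + "xx"] += 1
    total = sum(counts.values())
    blocked = counts["4xx"] + counts["5xx"]
    block_rate = blocked / total if total else 0.0
    return counts, block_rate
```

A few 404s are normal on any site, so the number to watch is the trend: a sudden jump in 403, 429, or 5xx responses to Googlebot usually points to a firewall rule, rate limiter, or capacity problem rather than to missing pages.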

Issue #2: Pages are “Discovered – Currently Not Indexed”

This status in Google Search Console means that Googlebot is aware of a URL but has decided not to crawl and index it yet. This is often a sign of a crawl budget issue or a signal that Google perceives the page to be of low quality. Addressing the root cause is key to resolving the “discovered currently not indexed” problem.

Issue #3: Orphan Pages

Googlebot primarily discovers pages by following links. Orphan pages, which have no internal links pointing to them, are therefore invisible to link-based crawling. Unless they are listed in a sitemap or linked from another website, they are unlikely to be discovered or indexed.
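Orphan pages can be found by comparing the URLs a site knows about with the URLs reachable by following internal links. The sketch below assumes the link graph has already been collected (for example by a site crawler) as a dictionary mapping each URL to the internal URLs it links to. `find_orphans` and its parameters are hypothetical names.

```python
from collections import deque


def find_orphans(known_urls, link_graph, start_url):
    """Return known URLs that cannot be reached by links from start_url.

    known_urls: every URL the site is aware of (e.g. from the sitemap or CMS).
    link_graph: dict mapping a URL to the internal URLs it links to.
    """
    reachable = {start_url}
    queue = deque([start_url])
    # Breadth-first traversal, mirroring how a crawler follows links.
    while queue:
        page = queue.popleft()
        for target in link_graph.get(page, ()):
            if target not in reachable:
                reachable.add(target)
                queue.append(target)
    return sorted(set(known_urls) - reachable)
```

Note that a page linked only from another orphan is also reported, because nothing on the crawlable part of the site leads to it.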

The Role of the Technical SEO Audit

A comprehensive technical SEO audit is essentially a health check of a website’s relationship with Googlebot. It is a systematic process of using tools such as site crawlers and server log analyzers to find and fix the issues that could be hindering Googlebot’s ability to crawl, render, and index a site.

The Bridge Between Your Site and the SERPs

Googlebot is the essential bridge that connects a website to the search results page. A deep and practical understanding of how this complex system works is a prerequisite for any serious SEO professional. The ultimate goal of a technical SEO strategy is to make Googlebot’s job as easy and efficient as possible. By providing clear instructions through files like robots.txt and sitemaps, by ensuring the site is fast and technically sound, and by creating a logical architecture, webmasters can build a powerful foundation for visibility and lasting organic growth.

Frequently Asked Questions About Googlebot

What is Googlebot?

Googlebot is the web crawling bot used by Google to discover and index pages from the web. It is the essential first step in a page becoming eligible to appear in Google’s search results.

How does Googlebot find my website?

Googlebot finds websites by following links from other sites it has already crawled and by processing the XML sitemaps that webmasters submit through Google Search Console.

How can I see what Googlebot sees?

The URL Inspection Tool in Google Search Console is the best way to see what Googlebot sees. It allows you to enter a URL from your site and will show you the rendered HTML that Googlebot was able to see, along with any crawling or indexing issues.

How do I block Googlebot from my site?

You can ask Googlebot not to crawl specific parts of your site by using the Disallow directive in your robots.txt file. To prevent a page from being indexed, you should use the noindex meta robots tag.

How often does Googlebot crawl my site?

The crawl frequency depends on a site’s “crawl demand.” Important, popular sites that are updated frequently will be crawled much more often than small, static sites. Crawl frequency is also limited by how many requests the server can handle without slowing down.