Optimizing the crawl budget is a critical aspect of technical SEO, especially for large and complex websites, ensuring that search engines can efficiently discover and index a site’s most important content. While it may sound like a highly technical and obscure concept, crawl budget is a finite resource that can have a significant impact on a website’s search performance. Wasting this resource on low-value or duplicate pages can prevent new, important content from being discovered and indexed in a timely manner. This guide will provide powerful hacks and proven strategies to help you maximize your crawl budget efficiency.

For many small websites, crawl budget is not a primary concern. However, for large e-commerce sites, publishers, and any website with thousands or millions of URLs, it becomes a central focus of the SEO strategy. A failure to manage it effectively can lead to a host of indexing problems and create a ceiling on a site’s organic growth potential. The following sections will provide a deep dive into what crawl budget is, why it matters, and a comprehensive list of actionable tactics to optimize it.

What is Crawl Budget? A Definitive Breakdown

To optimize the crawl budget, it is first essential to understand what it is. It is not a single, formally defined metric. Instead, it is a term used by SEO professionals to describe the combination of two core concepts that determine how a search engine crawls a website.

The Two Core Components

The idea of “crawl budget” is best understood as the result of two key factors:

Crawl Rate Limit: This is the maximum number of simultaneous connections a crawler will use to fetch content from a website, together with the delay it leaves between fetches, so that crawling does not degrade the server’s performance for real users. Search engines are careful not to overload a website’s server. A faster, more responsive server will naturally have a higher crawl rate limit, while a server that slows down or returns errors will see the limit reduced.

Crawl Demand: This is how much a search engine wants to crawl a particular website. Crawl demand is primarily driven by the site’s popularity (its authority and number of external links) and its freshness (how often new content is added or existing content is updated). A more popular and frequently updated site will have a higher crawl demand.

The combination of the server’s capacity and the search engine’s desire to crawl the site effectively determines the total number of URLs that will be crawled in a given period.

The Role of Googlebot

When discussing crawl budget, the primary crawler in question is Googlebot, the web crawler for the world’s largest search engine. The entire practice of crawl budget optimization is about making Googlebot’s job as easy and efficient as possible, guiding it toward a site’s most valuable pages and away from the unimportant ones.

When Does Crawl Budget Matter?

It is important to note that crawl budget is not a major concern for every website. For a small, well-structured website with a few thousand pages or fewer, search engines are typically able to crawl and index all the important content without any issues. Crawl budget becomes a critical consideration for very large websites, which is why it is a core focus of enterprise technical SEO. Any site with millions of URLs, or any site that auto-generates a large number of pages (like e-commerce sites with faceted navigation), must have a proactive crawl budget management strategy.

The High Cost of a Wasted Crawl Budget

When a significant portion of a site’s crawl budget is wasted on low-value URLs, it can lead to several serious and damaging SEO problems. These issues can prevent a site from realizing its full potential in organic search.

Delayed Indexing of New Content

If a search engine crawler is spending most of its time crawling thousands of unimportant, parameterized URLs, it can take much longer for it to discover and index new, time-sensitive content, such as a new blog post or product launch page. This delay can have a significant business impact.

Infrequent Updates to Existing Content

A wasted crawl budget can also mean that a site’s most important existing pages are re-crawled less frequently. If a key service page is updated with new information, that change will not be reflected in the search results until the page is re-crawled. An inefficient crawl process can slow down the rate at which these updates are processed.

The “Discovered – Currently Not Indexed” Problem

One of the most common and frustrating statuses in Google Search Console is “Discovered – currently not indexed.” This means that Google has found the URL but has not yet crawled it. While there can be several reasons for this, a strained crawl budget is often a contributing factor. The search engine may have postponed the crawl to avoid overloading the server, or it may have decided that other pages on the site are a higher priority.

Common Sources of Crawl Budget Waste

- Faceted Navigation: Creating a near-infinite number of duplicate URLs.

- URL Parameters: From tracking, sorting, or filtering.

- Duplicate Content: Multiple versions of the same page.

- Redirect Chains: Forcing crawlers to follow multiple hops.

- 404 Errors: Hitting dead ends.

- Thin or Low-Quality Content: Pages with little unique value.

How to Diagnose Crawl Budget Issues: Log File Analysis

The first step in optimizing the crawl budget is to diagnose where it is being spent. The most reliable way to get this information is through server log file analysis, because the logs record what the crawler actually requested rather than a sampled or aggregated view.

The Power of Server Logs

A server log file is a record of every single request made to a website’s server. This includes requests from real users and, most importantly, requests from search engine bots. By analyzing these log files, an SEO professional can see exactly which URLs are being crawled, which user-agent is crawling them, and how frequently they are being accessed.

What to Look for in Your Logs

A detailed log file analysis can reveal a wealth of information about how a site’s crawl budget is being used. Key things to look for include:

- Crawl volume by bot type: How many requests are coming from Googlebot versus other bots?

- Most crawled URLs and directories: Is the crawler spending most of its time on the most important sections of the site?

- HTTP status codes: Is the crawler encountering a large number of 404 errors or redirect chains?

- Crawls on non-canonical URLs: Is the crawler wasting time on parameterized or duplicate URLs that should be consolidated?

This type of deep analysis is a cornerstone of any professional technical SEO audit.
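
The checks listed above can be sketched in a few lines of Python. This is a minimal illustration, not a full log analyzer: it assumes the server writes the common "combined" access log format, and the sample lines and the function name `summarize_googlebot` are made up for the example.

```python
import re
from collections import Counter
from urllib.parse import urlsplit

# Combined Log Format is an assumption; adjust the pattern to your server's format.
LOG_PATTERN = re.compile(
    r'\S+ \S+ \S+ \[[^\]]+\] "(?P<method>\S+) (?P<url>\S+) [^"]*" '
    r'(?P<status>\d{3}) \S+ "[^"]*" "(?P<agent>[^"]*)"'
)

def summarize_googlebot(lines):
    """Count Googlebot requests by status code and top-level directory,
    and count how many of those requests carried a query string."""
    by_status, by_directory = Counter(), Counter()
    parameterized = 0
    for line in lines:
        match = LOG_PATTERN.match(line)
        if not match or "Googlebot" not in match["agent"]:
            continue  # skip unparseable lines and non-Googlebot traffic
        parts = urlsplit(match["url"])
        by_status[match["status"]] += 1
        # First path segment, e.g. "/products/blue-shoes" -> "/products"
        by_directory["/" + parts.path.strip("/").split("/")[0]] += 1
        if parts.query:
            parameterized += 1
    return by_status, by_directory, parameterized

# Made-up sample lines for illustration only.
GOOGLEBOT_UA = "Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)"
SAMPLE = [
    f'66.249.66.1 - - [10/Jan/2024:10:00:00 +0000] "GET /products/blue-shoes HTTP/1.1" 200 5120 "-" "{GOOGLEBOT_UA}"',
    f'66.249.66.1 - - [10/Jan/2024:10:00:05 +0000] "GET /products?color=red&sort=price HTTP/1.1" 200 4096 "-" "{GOOGLEBOT_UA}"',
    f'66.249.66.1 - - [10/Jan/2024:10:00:09 +0000] "GET /old-page HTTP/1.1" 404 312 "-" "{GOOGLEBOT_UA}"',
    '203.0.113.7 - - [10/Jan/2024:10:00:12 +0000] "GET /products/blue-shoes HTTP/1.1" 200 5120 "-" "Mozilla/5.0 (Windows NT 10.0; Win64; x64)"',
]

if __name__ == "__main__":
    statuses, directories, with_params = summarize_googlebot(SAMPLE)
    print(statuses.most_common())
    print(directories.most_common())
    print("Requests with query strings:", with_params)
```

Matching on the user-agent string alone is a simplification, since any client can claim to be Googlebot. For a real audit, the requesting IP addresses should also be verified, for example with a reverse DNS lookup.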

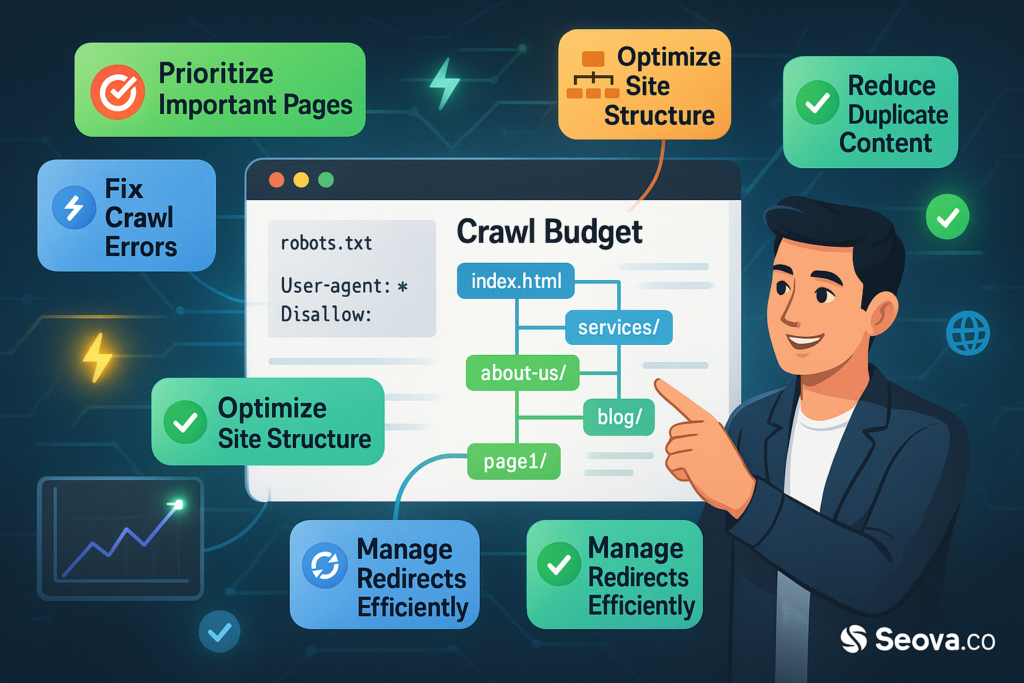

Powerful Hacks to Maximize Crawl Budget Efficiency

Once the sources of crawl waste have been identified, a series of powerful tactics can be employed to optimize the crawl budget and improve the efficiency of the crawl process.

Hack #1: Block Low-Value URLs with Robots.txt

The most direct way to save crawl budget is to use the robots.txt file to block search engines from crawling low-value sections of the site. This is a powerful tool that must be used with precision. Common candidates for being disallowed include URLs generated by on-site search, URLs with certain tracking parameters, and admin or login pages.
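
Because a mistake in robots.txt can block important pages, it helps to test rules before deploying them. The sketch below uses Python's standard-library `urllib.robotparser` to check sample URLs against a draft rule set. The paths (`/search`, `/admin/`, `/login`) and the example.com URLs are illustrative assumptions, not recommendations for any particular site.

```python
from urllib.robotparser import RobotFileParser

# Draft rules for illustration; the paths are assumptions, not taken from a real site.
ROBOTS_TXT = """\
User-agent: *
Disallow: /search
Disallow: /admin/
Disallow: /login
"""

def is_crawlable(url, user_agent="Googlebot", robots_txt=ROBOTS_TXT):
    """Return True if the draft rules allow user_agent to fetch url."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch(user_agent, url)

if __name__ == "__main__":
    for url in (
        "https://example.com/search?q=shoes",
        "https://example.com/admin/users",
        "https://example.com/products/blue-shoes",
    ):
        print(url, "->", "allowed" if is_crawlable(url) else "blocked")
```

The standard-library parser applies simple prefix matching, so rules that rely on wildcard patterns such as `*` or `$` may be evaluated differently here than by Googlebot. Treat the result as a sanity check rather than a guarantee.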

Hack #2: Use the noindex Tag Correctly

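It is important to remember that robots.txt controls crawling, while the noindex directive (set in a robots meta tag or an X-Robots-Tag HTTP header) controls indexing. A page that is noindexed but not blocked from crawling will still consume crawl budget, because the crawler has to fetch the page to see the directive. The reverse also matters: if a page is blocked in robots.txt, the crawler cannot see its noindex directive, so the URL can stay in the index when other pages link to it. A common strategy is therefore to leave a low-value page crawlable until the noindex has been seen and the page has dropped out of the index, and only then to consider blocking it in robots.txt.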
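
When auditing a crawl export, it can be useful to confirm which pages actually carry a noindex directive. The following is a minimal sketch using only the Python standard library. The class and function names are hypothetical, and the header handling is simplified: it ignores user-agent prefixes such as `googlebot:` that an X-Robots-Tag header may contain.

```python
from html.parser import HTMLParser

class RobotsMetaParser(HTMLParser):
    """Collects directives from <meta name="robots"> and <meta name="googlebot"> tags."""

    def __init__(self):
        super().__init__()
        self.directives = set()

    def handle_starttag(self, tag, attrs):
        if tag != "meta":
            return
        attributes = dict(attrs)
        if (attributes.get("name") or "").lower() in {"robots", "googlebot"}:
            content = attributes.get("content") or ""
            self.directives.update(
                part.strip().lower() for part in content.split(",") if part.strip()
            )

def has_noindex(html, x_robots_tag=""):
    """Return True if the HTML or the X-Robots-Tag header value contains noindex."""
    parser = RobotsMetaParser()
    parser.feed(html)
    header_directives = {
        part.strip().lower() for part in x_robots_tag.split(",") if part.strip()
    }
    return "noindex" in (parser.directives | header_directives)

if __name__ == "__main__":
    page = '<html><head><meta name="robots" content="noindex, follow"></head></html>'
    print(has_noindex(page))
```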

Hack #3: Manage URL Parameters Effectively

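URL parameters from faceted navigation and tracking links are one of the biggest sources of crawl budget waste. In addition to blocking some parameterized URLs in robots.txt, it is a best practice to point parameterized variants at a single preferred URL with rel="canonical" and to link internally only to that preferred version. Note that the URL Parameters tool in Google Search Console, once the standard way to tell Google how to handle specific parameters, was retired in 2022 and is no longer available.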
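
One way to keep parameters from multiplying crawlable URLs is to normalize every URL before it is written into an internal link, a canonical tag, or a sitemap. The sketch below shows the idea with Python's `urllib.parse`. The parameter names in `IGNORED_PARAMS` are hypothetical examples and should be replaced with the ones a given site actually uses.

```python
from urllib.parse import parse_qsl, urlencode, urlsplit, urlunsplit

# Hypothetical parameter names that do not change page content on this example site.
IGNORED_PARAMS = {"utm_source", "utm_medium", "utm_campaign", "sessionid", "sort"}

def canonicalize(url):
    """Drop ignored parameters and the fragment, and sort the remaining
    parameters so equivalent URLs collapse to a single form."""
    parts = urlsplit(url)
    kept = [
        (key, value)
        for key, value in parse_qsl(parts.query, keep_blank_values=True)
        if key.lower() not in IGNORED_PARAMS
    ]
    kept.sort()
    return urlunsplit((parts.scheme, parts.netloc, parts.path, urlencode(kept), ""))

if __name__ == "__main__":
    print(canonicalize("https://example.com/shoes?utm_source=news&color=red&sort=price"))
    print(canonicalize("https://example.com/shoes?size=9&color=red"))
```

Whether a parameter such as `sort` is safe to ignore depends on the site. A parameter that changes which products appear on a page usually deserves different handling from one that only tracks a campaign.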

Hack #4: Fix Broken Links and Redirect Chains

Every time a search engine bot hits a 404 “Not Found” error, it is a wasted request. Similarly, a redirect chain, which forces a bot to follow multiple redirects to get to a final page, is highly inefficient. A key optimization task is to crawl the site to find and fix all broken links and to eliminate redirect chains.
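
Redirect chains are easy to detect once the site's redirects are available as a simple source-to-target mapping, for example from a crawler export. The sketch below is one possible approach. The mapping, paths, and function names are made up for illustration.

```python
def resolve_chain(start, redirects, max_hops=10):
    """Follow redirects from start and return the full path of URLs visited.
    Raises ValueError on a loop or when max_hops is exceeded."""
    path = [start]
    current = start
    while current in redirects:
        if len(path) - 1 >= max_hops:
            raise ValueError(f"More than {max_hops} hops starting at {start}")
        current = redirects[current]
        if current in path:
            raise ValueError(f"Redirect loop detected starting at {start}")
        path.append(current)
    return path

def find_chains(redirects):
    """Return {source: final_target} for every redirect needing more than one hop."""
    chains = {}
    for source in redirects:
        path = resolve_chain(source, redirects)
        if len(path) > 2:  # source -> intermediate -> ... -> final
            chains[source] = path[-1]
    return chains

# Made-up redirect map for illustration.
REDIRECTS = {
    "/old": "/older",
    "/older": "/new",
    "/promo": "/sale",
}

if __name__ == "__main__":
    for source, final in find_chains(REDIRECTS).items():
        print(f"{source} should redirect straight to {final}")
```

Each entry returned by `find_chains` is a redirect that can be rewritten to point directly at its final destination, which removes the intermediate hops.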

Hack #5: Improve Site Speed and Server Response Time

A faster website allows a search engine crawler to request and download more pages in the same amount of time. Improving the server’s response time is a direct way to increase the crawl rate limit and, therefore, the overall crawl budget.

Hack #6: Maintain a Clean XML Sitemap

A clean and accurate XML sitemap is an important guide for search engines. The sitemap should only contain the final, canonical, 200 OK URLs that you want to be indexed. It should not contain any non-canonical URLs, redirected URLs, or broken links. Reviewing various sitemap examples can help ensure proper formatting.
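
The filtering rule described above can be applied automatically when the sitemap is generated. The sketch below assumes a hypothetical crawl export in which each page is a dictionary with `url`, `status`, and `canonical` keys, and it writes only self-canonical pages that returned 200.

```python
import xml.etree.ElementTree as ET

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"

def build_sitemap(pages):
    """Build sitemap XML from crawl records, keeping only 200 OK pages
    whose canonical URL is the page's own URL."""
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for page in pages:
        if page["status"] == 200 and page["canonical"] == page["url"]:
            url_element = ET.SubElement(urlset, "url")
            ET.SubElement(url_element, "loc").text = page["url"]
    return ET.tostring(urlset, encoding="unicode")

# Made-up crawl records for illustration.
PAGES = [
    {"url": "https://example.com/", "status": 200, "canonical": "https://example.com/"},
    {"url": "https://example.com/old", "status": 301, "canonical": "https://example.com/new"},
    {"url": "https://example.com/shoes?sort=price", "status": 200, "canonical": "https://example.com/shoes"},
    {"url": "https://example.com/shoes", "status": 200, "canonical": "https://example.com/shoes"},
]

if __name__ == "__main__":
    print(build_sitemap(PAGES))
```

In this example the redirected URL and the parameterized duplicate are left out, so the sitemap lists only the two pages that should be indexed.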

Hack #7: Improve Your Internal Linking Structure

A strong and logical internal linking structure is a powerful signal to search engines about which pages on a site are the most important. Pages that are linked to frequently from other important pages on the site are more likely to be crawled more often. A good structure also helps to prevent orphan pages, which have no internal links and are difficult to discover.
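
Orphan pages and weakly linked pages can be found by counting inbound internal links. The sketch below assumes the link graph is available as a dictionary that maps each known page to the pages it links to, for instance built from a site crawl combined with the sitemap. The graph and function names are illustrative.

```python
from collections import Counter

def inlink_counts(link_graph):
    """Count distinct internal pages linking to each page (self-links ignored)."""
    counts = Counter({page: 0 for page in link_graph})
    for source, targets in link_graph.items():
        for target in set(targets):
            if target != source:
                counts[target] += 1
    return counts

def find_orphans(link_graph, entry_points=("/",)):
    """Return pages with no inbound internal links, excluding known entry points."""
    counts = inlink_counts(link_graph)
    return sorted(
        page for page, count in counts.items()
        if count == 0 and page not in entry_points
    )

# Made-up link graph for illustration.
LINK_GRAPH = {
    "/": ["/products", "/blog"],
    "/products": ["/products/blue-shoes", "/"],
    "/blog": ["/products"],
    "/products/blue-shoes": [],
    "/landing/old-campaign": ["/products"],
}

if __name__ == "__main__":
    print("Orphans:", find_orphans(LINK_GRAPH))
    print("Most linked:", inlink_counts(LINK_GRAPH).most_common(3))
```

A page can only show up as an orphan here if it is already a key in the graph, which is why the list of known pages should come from a source other than link discovery alone, such as the sitemap or the server logs.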

Crawl Budget in the Context of Mobile and JavaScript

The modern web presents two additional layers of complexity for crawl budget management: the shift to mobile-first indexing and the rise of JavaScript-heavy websites.

The Impact of Mobile-First Indexing

With mobile-first indexing, the primary crawler is Googlebot Smartphone. This means that all crawl budget optimization efforts must be focused on the mobile version of the website. It is the mobile site’s performance, architecture, and configuration that will determine the crawl efficiency. A comprehensive mobile SEO strategy is therefore inseparable from a crawl budget strategy.

How JavaScript Rendering Consumes Crawl Budget

For websites built with JavaScript frameworks, the crawling process is more resource-intensive. A search engine must not only download the initial HTML but must also execute the JavaScript to render the final content of the page. This rendering step consumes additional resources. An inefficient or poorly optimized JavaScript implementation can be a massive drain on a site’s crawl budget.

The Key to Scalable SEO Success

Crawl budget optimization is a critical and advanced discipline within technical SEO. For large and complex websites, it is the key to ensuring that search engines can efficiently process a site’s most valuable content. By moving from a passive to a proactive approach to managing how a site is crawled, businesses can solve a wide range of indexing problems and unlock their full potential in organic search. A strategy that is focused on eliminating waste, improving site speed, and building a strong internal architecture is a hallmark of a sophisticated and highly effective SEO program.

Frequently Asked Questions About Crawl Budget

What is crawl budget?

Crawl budget is a term used to describe the number of pages a search engine will crawl on a website in a given period of time. It is determined by a combination of the site’s server capacity (crawl rate limit) and its popularity and freshness (crawl demand).

Does my website need to worry about crawl budget?

For small websites with a few thousand pages or fewer, crawl budget is generally not a major concern. It becomes a critical factor for large websites with tens of thousands or millions of pages, or for sites that auto-generate many URLs.

How do I check my crawl budget?

The Crawl Stats report in Google Search Console is the best place to get an overview of how Google is crawling your site. For a much more detailed analysis, you need to perform a server log file analysis.

How do I increase my crawl budget?

You can increase your crawl budget by improving your site’s speed and server response time (which increases the crawl rate limit) and by consistently adding high-quality, popular content and earning backlinks (which increases crawl demand).

How do I optimize my crawl budget?

You optimize your crawl budget by making the crawl process more efficient. The key tactics are blocking low-value URLs with robots.txt, fixing broken links and redirect chains, and improving your site architecture and internal linking. The entire process is a key part of advanced technical SEO.